Front-End Developers, Your Day Is Done - AI Can Do Your Job

Written by Alex Armstrong

Wednesday, 07 June 2017

There is a sad fascination in going over lists of jobs that are close to being lost forever to AI or robotic systems: Go player, truck driver, warehouse worker, taxi driver, delivery worker, front-end developer. Yes, front-end developer. Tony Beltramelli of UIzard Technologies has just published a paper and a video about pix2code.

The basic idea is fairly simple, if initially surprising. Take a neural network, show it a user interface and train it to produce the code that creates that user interface. You can train the network for a range of different systems: iOS, HTML and so on. The trick to being multilingual is to use a special DSL (Domain Specific Language) that describes the UI and is then compiled to the target language. Hence the neural net only has to learn a single language representation, one that is tailored to making learning easier.
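To make the "one DSL, many targets" idea concrete, here is a minimal sketch in Python. The DSL syntax, its token names (`screen`, `row`, `button`, `label`) and both template tables are invented for illustration; they are not pix2code's actual DSL or compiler. The point is only that a single tree description can be compiled mechanically to more than one platform, so the network never has to learn HTML or Android XML directly.

```python
"""Toy UI DSL compiled to two targets (hypothetical tokens, not pix2code's DSL)."""
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """One DSL element: a token name plus optional child elements."""
    token: str
    children: list[Node] = field(default_factory=list)


def parse(source: str) -> Node:
    """Parse hypothetical syntax like 'screen { row { label button } }' into a tree."""
    tokens = source.replace("{", " { ").replace("}", " } ").split()
    pos = 0

    def parse_node() -> Node:
        nonlocal pos
        node = Node(tokens[pos])
        pos += 1
        if pos < len(tokens) and tokens[pos] == "{":
            pos += 1  # consume "{"
            while tokens[pos] != "}":
                node.children.append(parse_node())
            pos += 1  # consume "}"
        return node

    return parse_node()


# Hypothetical per-target templates; "{}" is filled with the rendered children.
# "[text]" marks where label text would go, since the content is not reproduced.
HTML_TEMPLATES = {
    "screen": "<body>{}</body>",
    "row": '<div class="row">{}</div>',
    "button": "<button>[text]</button>",
    "label": "<p>[text]</p>",
}
ANDROID_TEMPLATES = {
    "screen": '<LinearLayout android:orientation="vertical">{}</LinearLayout>',
    "row": '<LinearLayout android:orientation="horizontal">{}</LinearLayout>',
    "button": '<Button android:text="[text]"/>',
    "label": '<TextView android:text="[text]"/>',
}


def compile_ui(node: Node, templates: dict[str, str]) -> str:
    """Recursively render a DSL tree using one target's templates."""
    inner = "".join(compile_ui(child, templates) for child in node.children)
    template = templates[node.token]
    return template.format(inner) if "{}" in template else template


if __name__ == "__main__":
    ui = parse("screen { row { label button } row { button button } }")
    print(compile_ui(ui, HTML_TEMPLATES))
    print(compile_ui(ui, ANDROID_TEMPLATES))
```

Adding a new platform in this sketch means adding one more template table; the parsed tree, which is what a network would be trained to emit, stays the same.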

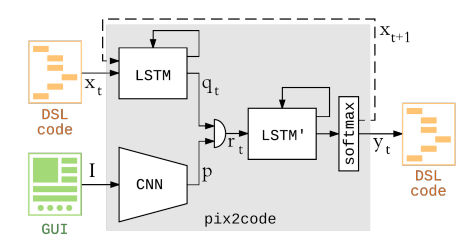

The architecture of pix2code is interesting. The visual component is handled by a convolutional neural network (CNN) and the language part by a Long Short-Term Memory (LSTM) network, i.e. a recurrent (feedback) network. If training is successful, what you have created is a neural network that, when shown a user interface, will output the code that creates it. Don't believe it will work? Then take a look at the video of it in action.
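The following pure-Python sketch shows the general data flow of a CNN encoder feeding an LSTM decoder that emits one token at a time. Everything here is an assumption for illustration: tiny sizes, random untrained weights, a hypothetical token vocabulary, and greedy decoding. It is not pix2code's architecture or code, and its output is meaningless until the weights are trained.

```python
import math
import random

random.seed(0)  # deterministic toy weights

# Hypothetical token vocabulary for the sketch.
VOCAB = ["<START>", "<END>", "row", "button", "label", "{", "}"]
HIDDEN = 4  # LSTM state size, chosen arbitrarily


def rand_matrix(rows, cols):
    """Random weights in [-0.5, 0.5]; a trained model would learn these."""
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    """Matrix-vector product on nested lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def conv2d_relu(image, kernel):
    """'Valid' 2-D convolution (cross-correlation, as CNN libraries use) plus ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [max(0.0, sum(image[i + a][j + b] * kernel[a][b]
                      for a in range(kh) for b in range(kw)))
         for j in range(len(image[0]) - kw + 1)]
        for i in range(len(image) - kh + 1)
    ]


def encode_image(image, kernels):
    """CNN encoder: one feature per kernel via global average pooling."""
    feats = []
    for kernel in kernels:
        fmap = conv2d_relu(image, kernel)
        feats.append(sum(map(sum, fmap)) / (len(fmap) * len(fmap[0])))
    return feats


class LSTMCell:
    """Standard LSTM cell (biases omitted for brevity)."""

    def __init__(self, input_size, hidden_size):
        # One weight matrix per gate: input, forget, output, candidate.
        self.W = {g: rand_matrix(hidden_size, input_size + hidden_size) for g in "ifog"}

    def step(self, x, h, c):
        xh = x + h  # concatenate input and previous hidden state
        i = [sigmoid(z) for z in matvec(self.W["i"], xh)]
        f = [sigmoid(z) for z in matvec(self.W["f"], xh)]
        o = [sigmoid(z) for z in matvec(self.W["o"], xh)]
        g = [math.tanh(z) for z in matvec(self.W["g"], xh)]
        c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        return h, c


def generate(image, kernels, cell, w_out, max_len=12):
    """Greedy decoding: each step sees the image features and the previous token."""
    feats = encode_image(image, kernels)
    h, c = [0.0] * HIDDEN, [0.0] * HIDDEN
    prev, tokens = "<START>", []
    for _ in range(max_len):
        one_hot = [1.0 if t == prev else 0.0 for t in VOCAB]
        h, c = cell.step(feats + one_hot, h, c)
        scores = matvec(w_out, h)  # one score per vocabulary entry
        prev = VOCAB[max(range(len(VOCAB)), key=scores.__getitem__)]
        if prev == "<END>":
            break
        tokens.append(prev)
    return tokens


KERNELS = [rand_matrix(3, 3) for _ in range(2)]
CELL = LSTMCell(len(KERNELS) + len(VOCAB), HIDDEN)
W_OUT = rand_matrix(len(VOCAB), HIDDEN)
screenshot = [[random.random() for _ in range(8)] for _ in range(8)]  # stand-in 8x8 image

if __name__ == "__main__":
    print(generate(screenshot, KERNELS, CELL, W_OUT))
```

Training would adjust `KERNELS`, the LSTM gate matrices and `W_OUT` so that, for each screenshot, the emitted token sequence matches the DSL description of that UI.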

The abstract from the paper says:

"Transforming a graphical user interface screenshot created by a designer into computer code is a typical task conducted by a developer in order to build customized software, websites and mobile applications. In this paper, we show that Deep Learning techniques can be leveraged to automatically generate code given a graphical user interface screenshot as input. Our model is able to generate code targeting three different platforms (i.e. iOS, Android and web-based technologies) from a single input image with over 77% of accuracy."

The first observation is that 77% accuracy isn't that high. It is an achievement, because the task is very difficult: mapping a structured image to a language description. However, for real-world work it isn't good enough. More training would almost certainly improve the accuracy, but that alone isn't going to be enough.
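One way to see why 77% falls short in practice is a quick calculation. The sketch below assumes, purely for illustration, that the figure behaves like a per-token accuracy and that errors occur independently; the paper's exact metric may differ, and real errors are unlikely to be fully independent. Under those assumptions the chance of getting a whole screen right drops quickly as the number of elements grows.

```python
"""Why a per-token accuracy of 77% is not good enough (illustrative assumptions)."""


def token_accuracy(predicted, reference):
    """Fraction of positions where the generated token matches the reference."""
    if not reference:
        raise ValueError("reference must not be empty")
    matches = sum(p == r for p, r in zip(predicted, reference))
    # Missing or extra tokens count as errors, so divide by the longer length.
    return matches / max(len(predicted), len(reference))


def chance_all_correct(per_item_accuracy, n_items):
    """Probability that n independent items are all correct."""
    return per_item_accuracy ** n_items


if __name__ == "__main__":
    ref = ["row", "button", "button", "label"]
    gen = ["row", "button", "label", "label"]
    print(token_accuracy(gen, ref))  # 0.75
    for n in (1, 5, 10, 20):
        print(n, round(chance_all_correct(0.77, n), 3))
```

With five independent elements the chance of an error-free screen is already only about 27%, which is the practical gap the article is pointing at.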

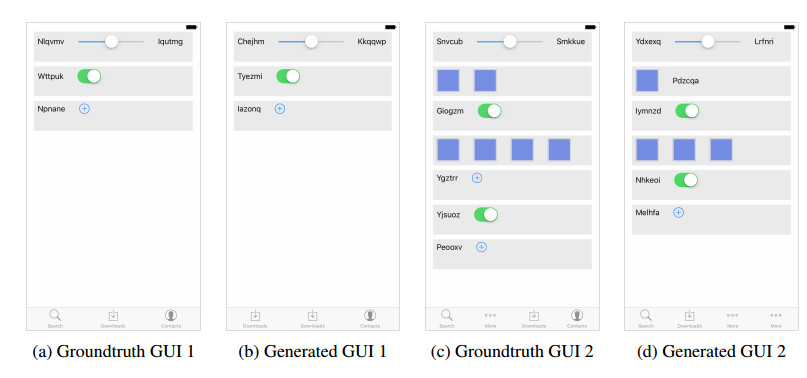

Two examples of input UIs and generated UIs. Notice that for c and d there is an error in the number of buttons.

Also note that the system doesn't aim to reproduce the text labels; the content is generated at random. The code generated only describes the layout of the UI, not the way that it is wired up. That is, even if it were 100% accurate, it would only solve the most trivial of the problems of front-end coding. It puts together a description of what elements are in the UI, where they are positioned and so on. It still needs a front-end programmer to write the code that determines what happens when a button is clicked or some data is entered.

There is also the small point that if you use a drag-and-drop editor, you get the code generated while the design is being created. In many ways the problem that the neural network is trying to solve is already solved; it is just that, for reasons that aren't clear, drag-and-drop designers aren't a universal feature of front-end design. This is particularly true in the case of HTML, but even Android, iOS and XAML designers tend not to use the drag-and-drop tools that are available.

So perhaps the robots aren't coming for front-end programmers' jobs just yet, but be warned: this is the shape of things to come.
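The gap between a generated layout and a working UI can be shown with a small sketch. The element ids, types and the handler table below are hypothetical: the layout list stands in for what a screenshot-to-code model might emit, while the handlers are what a programmer still has to write. A simple element count also illustrates how an error like the wrong number of buttons could be detected against the original design.

```python
"""Generated layout vs hand-written behaviour (hypothetical element ids)."""
from __future__ import annotations

from typing import Callable

# Stand-in for model output: structure only, no behaviour.
generated_layout = [
    {"id": "btn_login", "type": "button"},
    {"id": "btn_signup", "type": "button"},
    {"id": "txt_user", "type": "text_input"},
]

# What a front-end programmer still has to write by hand.
handlers: dict[str, Callable[[], str]] = {
    "btn_login": lambda: "checking credentials...",
}


def unwired_controls(layout, handlers):
    """Return ids of interactive elements that have no event handler yet."""
    interactive = {"button", "text_input"}
    return [e["id"] for e in layout
            if e["type"] in interactive and e["id"] not in handlers]


def count_of(layout, element_type):
    """Count elements of one type, e.g. to compare a generated UI with its source."""
    return sum(1 for e in layout if e["type"] == element_type)


if __name__ == "__main__":
    print(unwired_controls(generated_layout, handlers))  # ['btn_signup', 'txt_user']
    print(count_of(generated_layout, "button"))  # 2
```

Even a perfectly accurate layout leaves `unwired_controls` non-empty until someone writes the behaviour.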

More Information

pix2code: Generating Code from a Graphical User Interface Screenshot

Last Updated (Wednesday, 07 June 2017)