Formation of Partnership On AI

Written by Nikos Vaggalis

Friday, 30 September 2016

Amazon, DeepMind/Google, Facebook, IBM, and Microsoft have announced the founding of a non-profit organization that aims to advance public understanding of artificial intelligence technologies and to formulate best practices on the challenges and opportunities AI presents.

This collaboration comes into existence shortly after the inaugural report of the AI100 Study, which we reviewed earlier this week, and sets out to address the fundamental socio-economic issues that report raised: Who is responsible when a self-driving car crashes or an intelligent medical device fails? How can AI applications be prevented from promulgating racial discrimination or financial cheating? Who should reap the gains of efficiencies enabled by AI technologies, and what protections should be afforded to people whose skills are rendered obsolete? Ultimately, as people integrate AI more broadly and deeply into industrial processes and consumer products, best practices need to be spread and regulatory regimes adapted. Is it really a coincidence that Eric Horvitz, the man behind the Stanford University-based AI100 project, the 100-year effort to study the effects of artificial intelligence on every aspect of how people work, live, and play, is also the Interim Co-Chair of the Partnership, which wants to take responsibility for acting on the AI100 Study Group's findings?

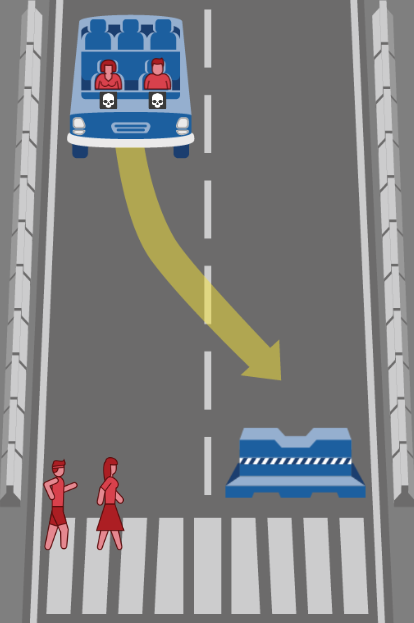

It's not rare, but also not customary, for players of the calibre of Microsoft and IBM, Amazon and Google to set aside their rivalry in order to work together on a common non-profit cause. The magnitude of this collaboration therefore reflects what's really at stake here: the need to persuade the public at large to adopt a positive attitude towards AI, which is considered of utmost importance in defining AI's success or failure. The concerns involved are truly formidable and span ethics, fairness, transparency, privacy, interoperability, collaboration between people and AI systems, and the trustworthiness, reliability, and robustness of the technology. The very need to discuss such concerns implicitly reveals how much has been accomplished in the field of AI, enough to render current laws, policies and regulations obsolete. And it's not just a matter of obsolescence; there are also new dilemmas, especially moral and ethical ones. For example, how should a machine behave when faced with a situation that can potentially harm people?

Looking into the past, we are all well aware of what such big-player collaborations can achieve, in fields ranging from Information Technology to Environment and Waste Management; examples include Microsoft's and Toyota's collaboration on intelligent energy consumption, Mercedes-Benz's and Facebook's on Social Driving, and the collaboration between Coca-Cola and ECO Plastics.

There's also another crucial aspect of the Partnership, albeit one flying under the radar. The word "interoperability" and the phrase "collaboration between people and AI systems" are of great significance and hint at the development of a standardized protocol for machine-to-human communication. Common protocols and open standards are frequently the products or by-products of collaboration. This is evident in the large number of established international standards bodies, the likes of ANSI, ISO, HL7, IEEE or W3C, while Microsoft and IBM have done it before, working on the SOAP and XML standards. In this case, however, they'll work on a common language for machine-to-machine as well as machine-to-human interaction, which is very important if AI is to blend in with human communities. It's also a critical move for safeguarding the public sector's need to avoid being locked into a single vendor and instead be free to work with machines or AI products that can communicate with other brands' counterparts or with humans.

In this light, Apple's refusal to enter the partnership raises many question marks. Does it prefer to stay apart from its competitors in order to promote its own line of products and standards, as it does with iPhone chargers, headphones and plugins, sticking by its image as the innovative, luxurious, even snobby, loner who wants to do everything its own way? Elon Musk is also conspicuous by his absence, which could be explained by the large amount of money he has already put into the 'rival' OpenAI project, which shares the same interests as the Partnership.
Of course, the power resulting from cooperation between such major corporations will, with reason, alarm people. Will they really use this power for the greater good? The Partnership is quick to respond that it has no intention of lobbying government or other policy-making bodies, thus easing the concern expressed in the closing stages of the AI100 report: "Like other technologies, AI has the potential to be used for good or nefarious purposes. Policies should be evaluated as to whether they democratically foster the development and equitable sharing of AI’s benefits, or concentrate power and benefits in the hands of a fortunate few."

One issue of even greater concern, which strangely enough neither the Partnership nor the AI100 report addresses, is the debate on AI's influence on weaponry and the power its holder will assume, an issue we investigated earlier this year in Autonomous Robot Weaponry.

More Information

Partnership on Artificial Intelligence to Benefit People

Guide by the British Standards Institute

Co-Business: 50 examples of business collaboration Book

Related Articles

How Will AI Transform Life By 2030? Initial Report

The Effects Of AI - Stanford 100 Year Study

Autonomous Robot Weaponry - The Debate

Achieving Autonomous AI Is Closer Than We Think


Last Updated (Tuesday, 29 May 2018)