Why AlphaGo Changes Everything

Written by Mike James

Monday, 14 March 2016


We have a breakthrough moment in AI, one that most experts thought would take at least another ten years. An AI system has taught itself to play Go, one of the most subtle and human of games, and it has beaten a human world champion. To explain why this is the AI equivalent of the first moon landing, we need a little background.

When I first started working in AI research, back in the late 1970s, any suggestion of work on neural networks was treated as if you had just thrown away whatever career prospects you might have had. It was bad enough to be eccentric and work in AI, but any contact with artificial brains marked you out as being as mad as Frankenstein. The reason was that talk of artificial brains was seen as crazy hype, and Minsky and Papert had, in 1969, rubbished neural networks in their incredibly influential book "Perceptrons". The book showed that a perceptron could not be made to solve even some of the most basic and simple problems: only a small subset of problems are "linearly separable" and hence learnable by a one-layer, perceptron-based neural network. Even the exclusive-or (XOR) of two inputs is out of reach.
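
To make linear separability concrete, here is a minimal Python sketch (not from the original article) of a single-layer perceptron trained with the classic perceptron learning rule. The step activation, learning rate and epoch limit are illustrative assumptions. AND is linearly separable, so training converges to a perfect classifier; XOR is not, so no choice of weights gets all four cases right and training never converges.

```python
# Perceptron learning rule on two Boolean functions (illustrative sketch).
INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND_TARGETS = [0, 0, 0, 1]   # linearly separable
XOR_TARGETS = [0, 1, 1, 0]   # not linearly separable


def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum is positive, else 0."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0


def train_perceptron(targets, epochs=100, lr=1.0):
    """Train with the perceptron rule; return (weights, bias, accuracy)."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, t in zip(INPUTS, targets):
            error = t - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                # Nudge the weights toward the correct side of the boundary.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # a full pass with no mistakes: converged
            break
    correct = sum(predict(weights, bias, x) == t for x, t in zip(INPUTS, targets))
    return weights, bias, correct / len(INPUTS)


if __name__ == "__main__":
    for name, targets in [("AND", AND_TARGETS), ("XOR", XOR_TARGETS)]:
        print(name, "accuracy:", train_perceptron(targets)[2])
```

Whatever epoch limit you choose, AND should reach full accuracy while XOR stays below it, because no straight line separates XOR's two classes.
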

It was widely believed that it had been proved beyond any doubt that neural networks weren't capable of doing anything useful. Only, of course, this isn't what Minsky and Papert had said. Their analysis applied to single-layer networks, and it was reasonably widely known that a neural network with more than one layer could solve the problems that Minsky and Papert had shown to be out of reach of single-layer networks.

So why were neural networks so out of favour? The answer is that, while there were algorithms that could train single-layer nets, there was no known way to train a multi-layer network. In 1974 Paul Werbos invented the back propagation algorithm, which solved the problem and basically removed the Minsky and Papert objection. In the mid-1980s back propagation was rediscovered by Rumelhart, Hinton and Williams, and the whole idea of parallel distributed processing (PDP), championed by Rumelhart and McClelland, burst onto the scene. This was the first time that neural networks became a popular approach to AI. You could take a multi-layer network, train it on simple tasks and it would learn, but at that time neural networks never got much beyond acceptable performance. It felt as if something was missing from the mix.

Slowly interest in neural networks declined and other, less theoretically capable, techniques came into vogue: Support Vector Machines, SIFT, HOG, particle filters, Bayesian networks and so on. All of these approaches had problems, but some of them provided much bigger success stories than the equivalent neural network approaches. It looked as if the neural network trained with back propagation was a practical dead end. It wasn't even clear that neural networks and back propagation were biologically plausible. This didn't obviously seem to be the way the mysterious human brain worked, and while today we do have some plausible biological mechanisms for back propagation, it still isn't clear.

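
A minimal sketch of back propagation, assuming a tiny two-layer network (two inputs, four sigmoid hidden units, one sigmoid output), a mean-squared-error loss and plain full-batch gradient descent; the sizes, learning rate and epoch count are illustrative choices, not anything from the historical work. The target is XOR, the function a single-layer perceptron cannot represent. The chain-rule gradients are also checked against finite differences, a common way to confirm a hand-written backward pass.

```python
import math
import random

# Illustrative two-layer network: 2 inputs -> HIDDEN sigmoid units -> 1 sigmoid output.
INPUTS = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
TARGETS = [0.0, 1.0, 1.0, 0.0]  # XOR
HIDDEN = 4
N_PARAMS = 4 * HIDDEN + 1       # W1 (2*HIDDEN), b1 (HIDDEN), W2 (HIDDEN), b2 (1)


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def unpack(theta):
    """Split the flat parameter vector into layer weights and biases."""
    w1 = [theta[2 * j: 2 * j + 2] for j in range(HIDDEN)]
    b1 = theta[2 * HIDDEN: 3 * HIDDEN]
    w2 = theta[3 * HIDDEN: 4 * HIDDEN]
    b2 = theta[4 * HIDDEN]
    return w1, b1, w2, b2


def forward(theta, x):
    """Return hidden activations and the network output for input x."""
    w1, b1, w2, b2 = unpack(theta)
    h = [sigmoid(b1[j] + w1[j][0] * x[0] + w1[j][1] * x[1]) for j in range(HIDDEN)]
    y = sigmoid(b2 + sum(w2[j] * h[j] for j in range(HIDDEN)))
    return h, y


def loss(theta):
    """Mean squared error over the four XOR cases."""
    return sum((forward(theta, x)[1] - t) ** 2 for x, t in zip(INPUTS, TARGETS)) / len(INPUTS)


def backprop(theta):
    """Gradient of loss(theta), computed layer by layer with the chain rule."""
    _, _, w2, _ = unpack(theta)
    grad = [0.0] * N_PARAMS
    n = len(INPUTS)
    for x, t in zip(INPUTS, TARGETS):
        h, y = forward(theta, x)
        # Error signal at the output unit's weighted input.
        delta_out = 2.0 * (y - t) * y * (1.0 - y) / n
        grad[4 * HIDDEN] += delta_out                  # b2
        for j in range(HIDDEN):
            grad[3 * HIDDEN + j] += delta_out * h[j]   # W2[j]
            # Propagate the error back through W2[j] and hidden unit j's sigmoid.
            delta_h = delta_out * w2[j] * h[j] * (1.0 - h[j])
            grad[2 * HIDDEN + j] += delta_h            # b1[j]
            grad[2 * j] += delta_h * x[0]              # W1[j][0]
            grad[2 * j + 1] += delta_h * x[1]          # W1[j][1]
    return grad


def numerical_grad(theta, eps=1e-6):
    """Central finite differences, used only to check backprop."""
    grad = []
    for k in range(len(theta)):
        plus, minus = list(theta), list(theta)
        plus[k] += eps
        minus[k] -= eps
        grad.append((loss(plus) - loss(minus)) / (2 * eps))
    return grad


def init_params(seed=0):
    """Small random starting weights and biases."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(N_PARAMS)]


def train(theta, lr=2.0, epochs=5000):
    """Plain full-batch gradient descent using the backprop gradient."""
    for _ in range(epochs):
        grad = backprop(theta)
        theta = [p - lr * g for p, g in zip(theta, grad)]
    return theta


if __name__ == "__main__":
    theta0 = init_params()
    gap = max(abs(a - b) for a, b in zip(backprop(theta0), numerical_grad(theta0)))
    print("max backprop vs finite-difference gap:", gap)
    theta = train(theta0)
    print("loss before:", loss(theta0), "after:", loss(theta))
    for x in INPUTS:
        print(x, round(forward(theta, x)[1], 3))
```

The loss should fall well below its starting value. Whether a particular random start reaches a clean XOR solution or stalls in a poorer local minimum depends on the initial weights, which is a known practical issue with gradient-based training.
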
It seemed we needed something new, and in the early 1990s something amazing happened. Gerald Tesauro, working at IBM, decided to combine a neural network with the then rather underdeveloped field of computational reinforcement learning. The result was TD-Gammon, a backgammon-playing program that achieved a level of play just below that of the top human players. It was so good that it changed the accepted wisdom on how backgammon should be played. It learned by playing against itself, and its only weakness was that it often played a poor endgame, the phase where logic can be applied. This was a breakthrough, but it largely went unnoticed. Because of TD-Gammon I switched my research to reinforcement learning, but it soon became clear that TD-Gammon was apparently a one-off. It seemed difficult to improve its backgammon play, and in other games the approach just didn't work as well. We speculated that there was something special about backgammon that made reinforcement learning and neural networks fit together.

Note: Gerald Tesauro, in an email to me, points out that "Any individual net would eventually reach a plateau, but it was always possible to get better performance by training a bigger net. The only drawback to this concept was a rough quadratic scaling in CPU time: doubling the NN size doubled the CPU time per game, plus the number of games to reach peak performance also doubled." In other words, doubling the network size roughly quadrupled the total training time. It seems it was all a matter of thinking bigger and bigger even then.

While all of this was going on, a few pioneers who wouldn't give up, notably Geoffrey Hinton, worked on modifications to the neural network approach: the something new that might make it all work spectacularly well. In particular, Hinton, together with Terry Sejnowski, invented the Boltzmann machine, a network that could learn probability distributions. At first, novel approaches to training neural networks seemed to be the way to make things work, and then something unexpected happened.

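
TD-Gammon learned with temporal-difference (TD) learning: rather than waiting for the final result of a game, it nudged the predicted value of each position toward the prediction for the position that followed. TD-Gammon used TD(λ) with a neural network evaluating backgammon positions; the minimal sketch below shows only the underlying idea in its simplest tabular form, TD(0), on a toy five-state random walk (a standard textbook example, assumed here purely for illustration). With no discounting and a reward of 1 only for leaving on the right, the true values are 1/6, 2/6, ..., 5/6, so the learned values can be checked.

```python
import random

N_STATES = 5   # non-terminal states 0..4; terminals lie beyond both ends
START = 2      # every episode starts in the middle state


def td0_random_walk(episodes=20000, alpha=0.01, seed=0):
    """Tabular TD(0) value prediction on a 5-state random walk.

    Each step moves left or right with equal probability. Leaving on the right
    gives reward 1, leaving on the left gives 0, and there is no discounting,
    so the true value of state i is (i + 1) / 6.
    """
    rng = random.Random(seed)
    values = [0.5] * N_STATES
    for _ in range(episodes):
        s = START
        while True:
            s_next = s + rng.choice((-1, 1))
            if s_next < 0:                 # left terminal: reward 0
                target, done = 0.0, True
            elif s_next >= N_STATES:       # right terminal: reward 1
                target, done = 1.0, True
            else:                          # bootstrap from the next prediction
                target, done = values[s_next], False
            # TD(0): move V(s) a small step toward the one-step target.
            values[s] += alpha * (target - values[s])
            if done:
                break
            s = s_next
    return values


if __name__ == "__main__":
    learned = td0_random_walk()
    for i, v in enumerate(learned):
        print(f"state {i}: learned {v:.3f}, true {(i + 1) / 6:.3f}")
```

No state is ever told its true value directly; each one learns only from its successor's current guess, yet the estimates settle close to the correct values.
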
The Internet provided neural network implementers with lots of data: cat videos, photos of all sorts, lots and lots of text, and so on. Computing power had grown to the point where the size of the data was not a problem, and hardware such as GPUs made it even more practical to crunch large datasets. Suddenly it all started to work. With enough data, and careful attention to how the networks were trained, the big problems of deep networks were tamed: they became trainable and overfitting was no longer a serious problem. The training techniques invented by Hinton and others helped make things work, but fundamentally it was the basic idea applied with care.

Deep networks trained on large datasets demonstrated an impressive ability to generalize. That is, they didn't achieve their results simply by remembering the data they had been trained on. They learned concepts within the data that allowed them to generalize to data they had never seen. This resulted in a resurgence of interest in neural networks, reborn as deep neural networks, around 2010. Today the best vision systems are neural networks, so-called convolutional neural networks.

Now we reach the final part of the story. Google's DeepMind has reunited neural networks and reinforcement learning to produce a set of systems that learn to play games, the latest and most impressive being AlphaGo.

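For its earlier system that learned to play Atari arcade games, DeepMind took a deep neural network and trained it using a variant of Q-learning, one of the earliest and best-known reinforcement learning methods. AlphaGo goes further: its networks were trained first on records of expert human games and then by reinforcement learning through self-play, and they guide a tree search that looks ahead at possible moves. Now that you know how we got here, you might be wondering: so what?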
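
The core of Q-learning is a single update that moves the estimated value Q(s, a) of taking action a in state s toward the reward received plus the discounted value of the best action in the next state. DeepMind's Atari player used a deep network to approximate Q; the minimal sketch below instead uses a plain table, a hypothetical five-state corridor with a reward only at the right-hand end, a discount factor of 0.9 and a uniformly random exploration policy, all assumptions chosen to keep the example small.

```python
import random

N_STATES = 5           # states 0..4 in a corridor; state 4 is the goal
LEFT, RIGHT = 0, 1
ACTIONS = (LEFT, RIGHT)
GAMMA = 0.9            # discount factor (illustrative choice)


def step(state, action):
    """Deterministic corridor: reaching the goal pays 1, everything else pays 0."""
    next_state = max(0, state - 1) if action == LEFT else state + 1
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(episodes=1000, alpha=0.5, seed=0):
    """Tabular Q-learning with a uniformly random behaviour policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = rng.choice(ACTIONS)
            next_state, reward, done = step(state, action)
            # Target: reward plus discounted value of the best next action.
            best_next = 0.0 if done else max(q[next_state])
            q[state][action] += alpha * (reward + GAMMA * best_next - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Best action in each non-goal state according to the learned table."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]


if __name__ == "__main__":
    table = q_learning()
    print("greedy policy:", ["right" if a == RIGHT else "left" for a in greedy_policy(table)])
    print("Q(0, right):", round(table[0][RIGHT], 3))
```

Because Q-learning is off-policy, the greedy policy read from the table should be "move right" in every state even though the agent itself only ever acted at random, and the learned value of moving right from the start should approach 0.9^3 = 0.729.
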

Deep Blue

There have been other examples of AI beating humans at games. Perhaps the best remembered is IBM's Deep Blue, which beat Garry Kasparov at chess in 1997. However, Deep Blue relied on brute-force computation. It didn't learn to play chess; it was programmed to play chess by searching ahead and evaluating moves. It was a magnificent achievement, but it didn't lead on to anything better in any other area of AI. In fact, soon after winning, Deep Blue was dismantled and the program was never seen again.
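
"Searching ahead and evaluating moves" is, at heart, minimax search: assume each player picks the move that is best for them and back the evaluations of future positions up the game tree. Deep Blue ran a heavily optimized alpha-beta search on custom chess hardware; the sketch below is only a toy version, with a small hypothetical tree whose leaf numbers stand in for a static evaluation function. Alpha-beta pruning skips branches that cannot change the result and returns the same value as plain minimax.

```python
import math

# Hypothetical two-ply game tree: the first player picks a branch, then the
# opponent picks a leaf. Leaf numbers stand in for a static evaluation
# function, scored from the first player's point of view.
TREE = [[3, 5], [2, 9], [0, 7]]


def minimax(node, maximizing):
    """Plain minimax: back up leaf evaluations, alternating max and min."""
    if not isinstance(node, list):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf):
    """Minimax with alpha-beta pruning; same value, fewer positions searched."""
    if not isinstance(node, list):      # leaf: use its static evaluation
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, best)
            if alpha >= beta:           # the opponent will never allow this line
                break
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta))
        beta = min(beta, best)
        if alpha >= beta:               # we already have a better option elsewhere
            break
    return best


if __name__ == "__main__":
    print("minimax:", minimax(TREE, True), "alpha-beta:", alphabeta(TREE, True))
```

In this tree alpha-beta never looks at the leaves 9 and 7: once the opponent can answer with 2 or 0, those branches are already worse than the 3 guaranteed by the first branch.
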

IBM's Watson winning at Jeopardy in 2011 was a more general-purpose example of AI than Deep Blue. Watson represents the alternative approach to AI: big symbolic processing. Watson stores lots of facts and uses rules to infer new facts from old ones. This sort of AI may have a use in the future, but at the moment it is confined to niche areas. You won't, for example, find Watson recognizing photographs or objects within photographs.

Neural networks, by contrast, are general-purpose AI. They learn in various ways and they aren't restricted in what they learn. AlphaGo learned to play Go, from novice to top level, in just a few months, first by studying records of human games and then by playing against itself. No programmer taught AlphaGo to play in the sense of writing explicit rules for game play. AlphaGo does still search ahead to evaluate moves, but so does a human player. All of this means that we are on the right track: neural networks, taught by us or by reinforcement learning, are enough. They can learn to do anything we can, and possibly do it better. They generalize what they learn in ways similar to the way humans generalize, and they can go on learning for much longer.
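
Inferring "new facts from old" with rules is classically done by forward chaining: repeatedly fire every rule whose premises are all known until nothing new appears. Watson's actual DeepQA system combined many techniques, including statistical scoring of evidence, so this is not how Watson works; it is only a minimal sketch of the rule-based idea described above, with hypothetical facts and rules represented as plain strings.

```python
def forward_chain(facts, rules):
    """Fire if-then rules repeatedly until no new facts can be inferred.

    `rules` is a list of (premises, conclusion) pairs; a rule fires when every
    premise is already a known fact.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


# Hypothetical facts and rules, for illustration only.
FACTS = {"Socrates is a man"}
RULES = [
    ({"Socrates is mortal"}, "Socrates will die"),    # needs a second pass to fire
    ({"Socrates is a man"}, "Socrates is mortal"),
    ({"Socrates is a god"}, "Socrates is immortal"),  # premise never holds
]

if __name__ == "__main__":
    for fact in sorted(forward_chain(FACTS, RULES)):
        print(fact)
```

Everything such a system knows has to be written down as facts and rules, which is exactly the kind of hand-built knowledge that AlphaGo's learned networks avoid.
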

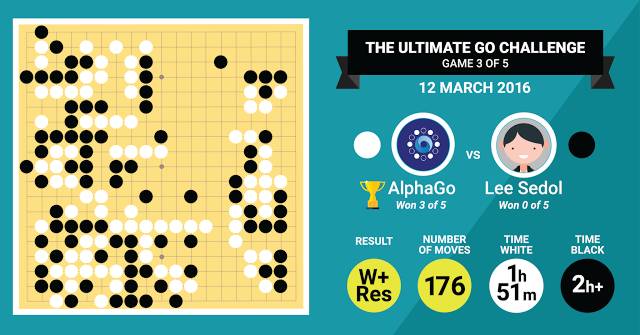

Go is a subtle game that humans have been playing for 3,000 years or so, and an AI learned how to play it in a few months without any expert hand-coding how to play. Until very recently Go was the Everest of AI, and climbing it was expected to take ten years or more and some great new ideas. It turns out we have had the tools for a while; what was new was how we applied them. March 12, 2016, the day on which AlphaGo won the DeepMind Challenge Match, will be remembered as the day that AI finally proved that it could do anything.

It is still early days and we need more powerful hardware and refinements of approach, but it now seems clear that strong AI is not only possible but also within our reach. What we need to do next is to start building systems that make use of multiple neural networks: convolutional networks for vision, recurrent neural networks for memory and language understanding, and so on. There is still a long way to go, but we are certainly on track.


Last Updated (Thursday, 01 November 2018)