Heartbleed Makes The Case For Managed Code

Written by Alex Armstrong

Thursday, 24 April 2014

Heartbleed has made a lot of headlines because of the trouble and cost it caused and is perhaps still causing. What we need to do is to learn the lessons it teaches. The message is very clear - use managed code.

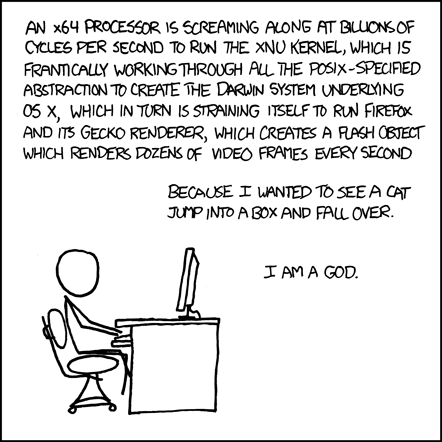

Heartbleed is a classic bug. It is the sort of bug you are told about when you first learn to program in languages such as C and C++ and it is the sort of bug that you are told about when you start to question the rightness of using managed code. It is the tale told to young novices when they are in danger of being seduced by the dark side of C and low-level languages. It is how we frighten them back into the fold when they start to seek the efficiency and speed that are the promise of languages that are closer to the metal.

The issue is about the primitive power that the bare metal offers compared to the layers of incredible sophistication that give rise to a modern programming language. But the argument is a difficult one, and it is difficult to convince any programmer who suddenly learns that there is something lurking below the surface of languages such as Java or C# that they really don't want to go there.

Back in the days when we didn't have a choice we wrote programs that had the full run of the machine. It wasn't much of a machine and we had to work hard to create the sorts of things that we take for granted today. Even the simple array was a tough cookie. You had to allocate the raw memory from a start address to an end address and you had to work out how big it needed to be to hold everything you wanted to store. You also had to arrange for the address arithmetic that let you find an element and store and retrieve something. In short, you had a lot of code to write to get what we consider the simplest data structure available in any modern language.

When C came along, a lot of the work in implementing an array was automated, but it didn't move far from the underlying implementation that you would have used if you were programming in assembler. And given that the machines we used were primitive and slow, any attempt to move away would have been resisted on the grounds of efficiency. In computing there is always this tension between sophistication and efficiency. As you abstract away from the bare metal you incur costs that result in your program running slower. And anyone who knows how the underlying machine works is usually horrified at how many layers there are between the algorithm you are trying to implement and how it finally plays out on the hardware.

[Image: Abstraction]

More cartoon fun at xkcd, a webcomic of romance, sarcasm, math, and language.
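The hand-built array described above, with its start address, size calculation and address arithmetic, can be sketched roughly as follows. This is only a simulation: a Python `bytearray` stands in for raw memory, and the names `MEMORY`, `ELEM_SIZE`, `element_address`, `store` and `load` are invented for the illustration.

```python
import struct

MEMORY = bytearray(64)   # stand-in for the machine's raw memory (an assumption)
ELEM_SIZE = 4            # bytes per 32-bit integer element


def element_address(base, index):
    """Address arithmetic: start address plus index times element size."""
    return base + index * ELEM_SIZE


def store(base, index, value):
    """Write a 32-bit little-endian integer at the computed address."""
    struct.pack_into("<i", MEMORY, element_address(base, index), value)


def load(base, index):
    """Read a 32-bit little-endian integer from the computed address."""
    return struct.unpack_from("<i", MEMORY, element_address(base, index))[0]


# Two "arrays" carved out of the same memory by hand.
A_BASE, A_LEN = 0, 4                            # occupies bytes 0..15
B_BASE, B_LEN = A_BASE + A_LEN * ELEM_SIZE, 4   # occupies bytes 16..31

for i in range(A_LEN):
    store(A_BASE, i, 10 + i)
store(B_BASE, 0, 999)

print(load(A_BASE, 2))       # element 2 of the first array
# Nothing stops an index past the end: it reads the neighbour's data.
print(load(A_BASE, A_LEN))
```

The last line is the point of the sketch: the arithmetic works just as well for an index one past the end of the first array, and what comes back is the first element of the second.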

Even a simple one- or two-line C program that you can translate in your head into a ten-instruction assembly language program usually acquires hundreds of assembly language instructions when run through a typical compile/link system. Code bloat starts with C.

Of course the problem with low-level code is that it is very easy to get it wrong, and in ways that an attacker can not only guess at but also exploit. Ever since there were C programmers, they have been constantly warned that memory allocation and use errors are a very big and common danger. All you have to do is allocate the wrong amount of storage and an array will either run over the end and into other data or allow access to that data.

Buffer overruns are dangerous because they provide access to memory outside of the array. The solution to buffer overrun is simply to put a check in before each array access - but this means a few extra instructions per array access. Buffer underruns, i.e. only using part of the allocated memory, allow access to whatever data was in the array's memory before it was allocated. The solution to a buffer underrun is to initialize all of the memory before the program gets to use it. Again, this adds instructions to the program that can be seen as inefficient.

The solution to these and other similar problems is to move to managed code. The term is Microsoft's and specifically refers to the .NET system, but it is reasonable to use it to mean anything that isn't unmanaged like assembler and C. Managed code comes with its own software execution environment, which is vastly more sophisticated than any bare metal machine. The runtime for managed languages can come with a Just In Time compiler or a Virtual Machine. It can even be statically compiled. But without its runtime environment it simply can't run on a bare machine. The runtime provides bounds checking and initialization on all data structures and it keeps the code well away from real memory allocations.
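As a rough illustration of the two fixes just described, a check before each access and initialization before first use, here is a minimal sketch. `CheckedArray` is a hypothetical helper written for this example, not part of any real runtime, and the `bytearray` again only simulates raw memory that still holds a previous owner's data.

```python
class CheckedArray:
    """A byte array over a shared buffer that is zero-filled on allocation
    and bounds-checked on every access (a hypothetical helper)."""

    def __init__(self, memory, base, length):
        self._memory = memory
        self._base = base
        self._length = length
        # Initialization: wipe whatever a previous owner left behind.
        memory[base:base + length] = bytes(length)

    def _check(self, index):
        # Bounds check: the few extra instructions paid on each access.
        if not 0 <= index < self._length:
            raise IndexError(f"index {index} outside 0..{self._length - 1}")

    def __getitem__(self, index):
        self._check(index)
        return self._memory[self._base + index]

    def __setitem__(self, index, value):
        self._check(index)
        self._memory[self._base + index] = value


# Simulated raw memory still holding a previous owner's data.
memory = bytearray(b"old-password-123" + b"neighbour-secret")

stale = bytes(memory[0:16])          # unmanaged view: stale data is readable
arr = CheckedArray(memory, 0, 16)    # managed view: zeroed before use
arr[0] = ord("A")

print(stale)
print(arr[0], arr[1])
try:
    arr[16]                          # would be the neighbour's first byte
except IndexError as err:
    print("rejected:", err)
```

Both safeguards cost work on every allocation or access, which is exactly the overhead the efficiency argument objects to.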
A managed program never knows where it or any of its data are stored in terms of real memory addresses. Any attempt by an attacker to compromise the system is made more difficult because of this detachment from the real machine. You can, if you like, call the runtime a sandbox, but this comes with overtones of restrictions. Ideally a runtime should be more powerful than the bare metal and allow you to do things that would otherwise be difficult. The runtime should allow safe dynamic memory allocation with bounds checking and it should do garbage collection so that you can't leak memory and so on.

All of this comes at a cost and it is this cost that C and C++ programmers typically don't want to pay. Yes, there are still lots of places where you really do need to get back closer to the metal but, if you can move to or stay with managed code, you should. Recently Microsoft has been showing signs of pulling back on managed code in favor of faster, more efficient C/C++ approaches and this is a huge mistake. Languages like C++, and especially C, should be marginalized for one simple reason - they ARE dangerous.

Until Heartbleed this opinion could be regarded as abstract. "Yes, of course my favourite language is dangerous, but only to a novice." The belief generally held was that dangerous languages were only dangerous in the hands of the incompetent programmer - WRONG. What you have been warned against can happen at any time and to the most experienced programmer. You only have to let your concentration wander for a few seconds and when you come back to read your code again it all seems perfectly OK. We tend to believe in what we have written until it goes wrong.

Heartbleed is evidence that all of the scare stories that you have been told about unmanaged code are TRUE. And what is more, it DOES happen and it happens in ways that matter. To be 100% clear, Heartbleed happened not because a programmer screwed up, but because the language was too primitive to know better.
If OpenSSL had been written in Java or C# then there could have been no Heartbleed bug. There would have been other exploitable bugs, no doubt, but none quite so "classic". It is well past the time for making such mistakes.

Languages have to evolve away from the primitive hardware that they run on and they need to embody ever more sophisticated abstractions. This means that they are likely to become less efficient as they evolve, but this isn't a simple relationship. The efficiency of a language is a matter of its implementation, not of its design or structure. At many points in language development some aspect or other was deemed to be too expensive to implement and then hardware got better or someone figured out how to do the job efficiently, and the new feature was added to most languages at their next update. Even a language such as JavaScript, which was notorious for its sluggish performance, has turned into something that can rival "native", aka unmanaged, code in browsers. It is not a question of how fast a language goes but how fast an implementation goes.

It has to be admitted that algorithms implemented in managed languages are likely to have overheads, but these are overheads that are due to the implementation of features that are not just desirable but essential. How can you possibly claim that not doing bounds checks on array access is a way to make your code more efficient when it is also the assured way to make it exploitable? If you have ever argued that language X is better because it is faster then you are really missing the point.

Heartbleed teaches us that unmanaged languages are defective in practice as well as in theory.
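For readers who want the mechanics behind the argument, the following is a much-simplified sketch of the kind of over-read involved. It is not OpenSSL's code. The function names and the memory layout are invented, and a `bytearray` once more simulates a process's memory in which a request payload sits next to unrelated data.

```python
# Simulated process memory: the request payload sits next to unrelated secrets.
PAYLOAD = b"bird"
MEMORY = bytearray(PAYLOAD + b"|server-private-key|")
PAYLOAD_BASE = 0


def echo_unchecked(claimed_length):
    """Trusts the length field in the request, as a raw memory copy would."""
    return bytes(MEMORY[PAYLOAD_BASE:PAYLOAD_BASE + claimed_length])


def echo_checked(claimed_length):
    """Refuses any request whose claimed length exceeds the real payload."""
    if not 0 <= claimed_length <= len(PAYLOAD):
        raise ValueError("claimed length does not match payload")
    return bytes(MEMORY[PAYLOAD_BASE:PAYLOAD_BASE + claimed_length])


print(echo_unchecked(4))     # the honest request: just the payload
print(echo_unchecked(24))    # the dishonest request: payload plus neighbours
try:
    echo_checked(24)
except ValueError as err:
    print("rejected:", err)
```

In the unchecked version the caller decides how much memory comes back. In a language such as Java the payload would be an array object of its own, and an attempt to copy more bytes than it holds raises an exception instead of returning whatever lies beyond it.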




Last Updated ( Friday, 25 April 2014 )