Google Glass The Mirror API - How It Works

Written by Mike James

Saturday, 06 April 2013

Timothy Jordan gave developers at SXSW a sneak peek at the Google Mirror API, which is what they'll use to build services for Glass, and now you can see it as a video. What it reveals is that the Mirror API has more structure than you might expect.

Everyone seems to be as much frightened by Google Glass as they are excited by it, but what of the ways in which we can program it? Surely it is going to be complicated interacting with all that sophisticated design - speech input, speech output, the gesture-based UI and so on. Where to start?

The truth of the matter is that the Mirror API only permits a very limited range of interactions with the hardware and the user, but it is probably more than enough for everything but the most radically innovative app that needs to talk directly to the hardware. In brief, the Mirror API is based on a simple model that makes it a lot like creating a web app.

It is described reasonably well in the video. Unfortunately, 50 minutes to describe a simple API is a bit long and there is a tendency to wander off topic. After a brief promo for Glass and a discussion of why it's all really, really exciting, with no mention of scary, we get to an interesting demo of Glass, at around 10 minutes into the video, with an attempt to show what it is like to wear it and use it. The importance of this section is that it details the user interface and how you will be able to interact with the device. This may not be as impressive as the promo video, but it is much more informative in that it shows Glass taking realistically poor photos and how the user has to swipe and tap at the arm of the glasses to give gesture commands.

The meat of the talk - the API and how it might be used - starts at around 15 minutes. The Mirror REST API is explained in quite a lot of detail, including the POST, PUT and GET headers, which is perhaps more detail than actually required. Of course, you can use any language that has the ability to work with HTTP on the server, and the details of exactly how to issue a POST, PUT or GET vary from language to language.
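To give a feel for what "issuing a POST" from the server might look like, here is a minimal Python sketch that builds, but does not send, a request to insert one card. The endpoint URL, the bearer-token header and the `text` field are assumptions made for illustration rather than details confirmed in the talk, and `build_insert_request` is a hypothetical helper name.

```python
import json
import urllib.request

# Assumed endpoint: a placeholder for illustration, not taken from the talk.
TIMELINE_URL = "https://www.googleapis.com/mirror/v1/timeline"


def build_insert_request(text, access_token):
    """Build (but do not send) a POST request that would insert one card."""
    # The JSON body carrying the card text is an assumed payload shape.
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        TIMELINE_URL,
        data=body,
        method="POST",
        headers={
            # OAuth-style bearer token; the token value here is hypothetical.
            "Authorization": "Bearer " + access_token,
            "Content-Type": "application/json",
        },
    )


request = build_insert_request("Hello from the server", "HYPOTHETICAL_TOKEN")
print(request.get_method(), request.full_url)
```

Nothing goes over the network here. Sending the request would need a real access token and a call such as `urllib.request.urlopen(request)`, and the same request could be built just as easily in any other language with an HTTP library.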

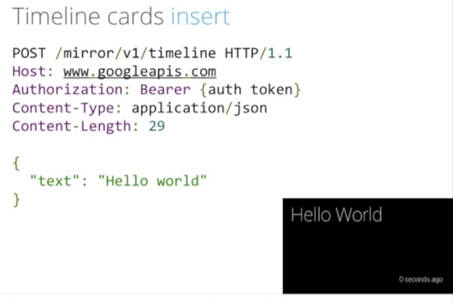

The API is surprisingly high level and works in terms of "Timeline" cards. These act as the basic unit of interaction with the Glass user. They work like tiny HTML pages downloaded from the server and displayed via Glass, and they have simple menu options that the user can select via a tap. You can insert cards into the user's information stream in response to a subscription notification, and the user can scroll through the timeline with a swipe. You can also register services that cards, e.g. photos, can be shared to, so the interaction can be two-way.

All of the processing, interaction logic and generally clever work is performed on the application's server, which simply sends new Timeline cards and receives any that are sent back to it. This is the same sort of client-server behavior you find in an Ajax app, only much simplified. The full Mirror API gives you nothing more than Timeline cards, menu options on the Timeline cards, share entities and subscriptions, and that is all.

It is all very simple, but it might be more than enough when you add the built-in services that Glass provides. When you add it to the voice input capabilities of Glass and the ability to take photos, things become more interesting. For example, the user could voice dictate a reply to a question or send some voice dictated requests to the server. The user could take a photo, say, in response to a request from the server and then share it, and so on.

The real question that is unanswered at the moment is what else there might be in the API above and beyond the Timeline card. There probably isn't going to be any deeper level of integration with Glass - no lower level interface that allows you to get at the camera or audio system directly. This isn't unreasonable from the point of view of security. The higher level abstraction that Mirror provides treats Glass as a simple I/O device organized as a sequence of Timeline cards, which is probably enough for most applications.
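The card-and-subscription model can be sketched on the server side in a few lines of Python. This is only an illustration under stated assumptions: the field names (`html`, `menuItems`, `action`, `itemId`, `userActions`, `type`) and the action values (`REPLY`, `SHARE`, `DELETE`) are assumed rather than taken from the talk, and `make_card` and `handle_notification` are hypothetical helper names.

```python
import json


def make_card(html, actions):
    """Return a dict describing one Timeline card with tap-selectable menu options."""
    # Field names are assumptions about the card format, used for illustration.
    return {"html": html, "menuItems": [{"action": a} for a in actions]}


def handle_notification(raw_body):
    """Turn a subscription notification (JSON text) into a follow-up card."""
    note = json.loads(raw_body)
    item_id = note.get("itemId", "unknown")
    # Collect what the user did with the card, e.g. shared it or replied to it.
    actions = [a.get("type") for a in note.get("userActions", [])]
    if "SHARE" in actions:
        html = "<article><p>Thanks for sharing item %s</p></article>" % item_id
        return make_card(html, ["DELETE"])
    # Any other notification gets a generic card that invites a reply.
    html = "<article><p>Update received for item %s</p></article>" % item_id
    return make_card(html, ["REPLY", "DELETE"])


# Simulated notification body, as the server might receive it from a subscription.
incoming = json.dumps({"itemId": "abc123", "userActions": [{"type": "SHARE"}]})
print(json.dumps(handle_notification(incoming), indent=2))
```

All the decision making lives in `handle_notification` on the server. In this model, Glass would only display the card that comes back and report the user's next tap.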

Once you have understood the way that Timeline cards provide a two-way communication method between your server and the user, it all makes sense. Any real work that your app performs has to be done on the server. So now you know - what do you think you could do with Glass?


Last Updated ( Saturday, 06 April 2013 )