DARPA Wants Analog To Boost Super Computer Performance

Written by Harry Fairhead

Friday, 27 March 2015

The first practical computers were analog computers and now DARPA thinks that we might be able to speed things up by bringing them back.

The computers that we use today are digital and the idea of an analog computer is pretty much forgotten, or at best an item in a history book. What exactly is an analog computer? The answer is that it is a computing device that provides answers by physical modeling of the system of interest.

For example, if you have a road network and you want to find the shortest route between two towns then with a digital computer you build a representation of the road network and search it for the shortest path. Using a standard method such as Dijkstra's algorithm this takes about n² operations, where n is the number of towns. Now consider the analog approach. Take a ball of string and cut pieces that are the distance between each pair of directly connected towns, but in inches not miles. Tie the pieces together to make a physical model of the road network. Now to find the shortest distance between two towns pick the string network up, holding the knots that represent the two towns, and pull apart. The strings that stop you, i.e. the first ones to go taut, mark the shortest path. Ignoring the time to make the model, this analog algorithm works in fixed time irrespective of the number of towns. This is the power of the analog method. You don't have to do the computation because the universe does it for you.

The trouble is that analog computers had their day when mechanical construction was the rule. Multiplication involved gear ratios, and other mathematical operations such as integration needed complicated pieces of machinery that looked like something Rube Goldberg would have created.
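The digital side of the road-network example can be sketched in a few lines. This is only an illustration, assuming the simple array-based form of Dijkstra's algorithm; the function name `shortest_distance` and the four-town `roads` table are made up for the example and do not come from the article or from DARPA.

```python
import math

INF = math.inf  # marks "no direct road between these two towns"


def shortest_distance(dist, source, target):
    """Array-based Dijkstra: roughly n*n steps for n towns.

    dist[i][j] is the length of the direct road from town i to town j,
    or INF if there is none. All lengths are assumed non-negative.
    """
    n = len(dist)
    best = [INF] * n      # shortest distance found so far to each town
    best[source] = 0.0
    done = [False] * n    # towns whose shortest distance is final

    for _ in range(n):
        # Pick the closest town that is not yet final (a scan over n towns).
        u = -1
        for i in range(n):
            if not done[i] and (u == -1 or best[i] < best[u]):
                u = i
        if best[u] == INF:
            break         # the remaining towns cannot be reached
        if u == target:
            return best[u]
        done[u] = True
        # Try to improve every other town by going through u (another n steps).
        for v in range(n):
            if not done[v] and best[u] + dist[u][v] < best[v]:
                best[v] = best[u] + dist[u][v]
    return best[target]


# Hypothetical network of four towns, distances in miles.
roads = [
    [0,   4,   1,   INF],
    [4,   0,   2,   5],
    [1,   2,   0,   8],
    [INF, 5,   8,   0],
]

print(shortest_distance(roads, 0, 3))
```

The two nested scans over the towns are where the n² cost comes from. The string model has no equivalent loop: pulling the two knots apart takes the same time however many towns are tied in.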

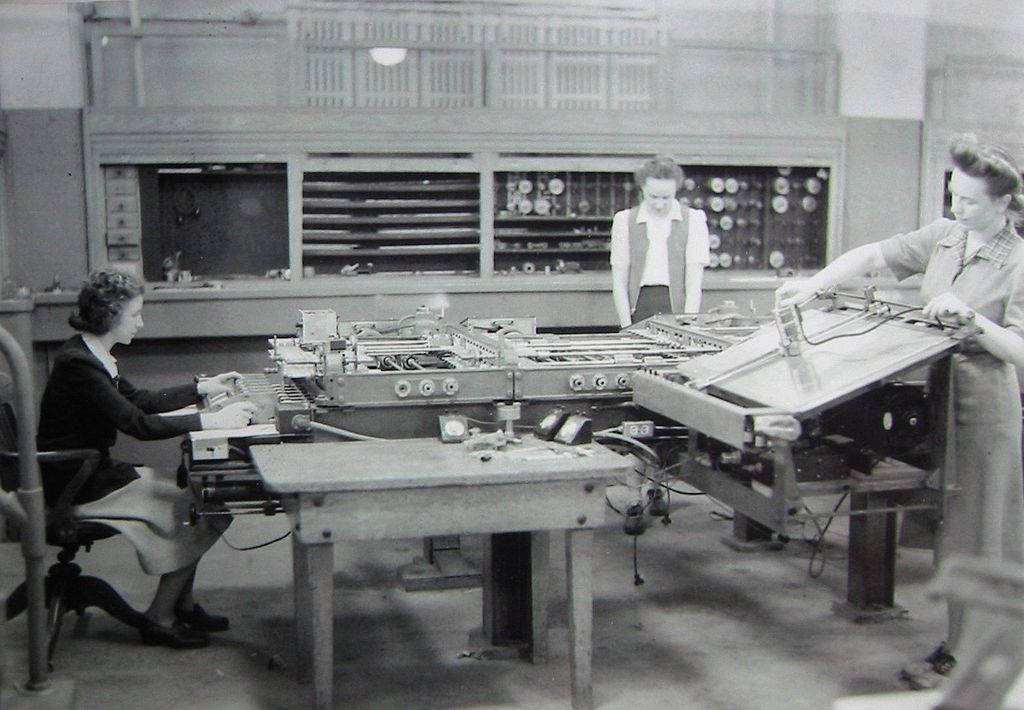

The differential analyzer at the Moore School circa 1942.

With the rise of integrated circuits analog computers had a revival because it was possible to compute functions using a standard building block - the operational amplifier or op amp. This one circuit could be configured to add, multiply, integrate and differentiate, and all you had to do to create an analog program to solve a differential equation was to wire op amps up correctly. Of course, this isn't as easy as being able to simply write a program, but rewiring was also how the first digital computers - Colossus and ENIAC - were programmed, before stored program designs got rid of the need to rewire each time the program changed.

An early 741 op amp - Teravolt licensed under CC
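To give a feel for what "wiring up" an analog program means, here is a rough digital imitation of one. It assumes a classic textbook patch, not anything taken from the article: two integrators and an inverter connected in a loop, which solves the equation x'' = -x. The function name `run_integrator_loop`, its parameters and the step size are all illustrative choices.

```python
import math


def run_integrator_loop(x0=1.0, v0=0.0, t_end=math.pi, dt=1e-4):
    """Digitally imitate an analog patch for x'' = -x.

    On an analog machine the three blocks below run continuously and
    simultaneously; here they are stepped one after another in small
    time slices of length dt.
    """
    x, v = x0, v0
    steps = int(round(t_end / dt))
    for _ in range(steps):
        a = -x        # inverter: output is the input with its sign flipped
        v += a * dt   # first integrator: accumulates acceleration into velocity
        x += v * dt   # second integrator: accumulates velocity into position
    return x, v


# With x(0) = 1 and x'(0) = 0 the exact solution is x(t) = cos(t),
# so after t = pi the position should be close to -1 and the velocity close to 0.
x_end, v_end = run_integrator_loop()
print(x_end, v_end)
```

The digital version needs tens of thousands of loop iterations to cover a short stretch of simulated time, and a smaller `dt` buys accuracy at the cost of more iterations. The analog circuit simply settles into the solution as it runs, which is the speed-up the article is describing, but its accuracy is limited by the components rather than by a step size you can choose.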

Today analog computers are mainly used for fixed tasks - like implementing a radio or coding circuit. You don't encounter general purpose analog computers and this seems to be what DARPA wants - a general purpose analog computer. The Request For Information states that what is of interest is:

In principle it isn't difficult to see that analog computing could be useful in predicting any system that can be represented by a differential equation - the weather, the progress of an epidemic, the design of optical systems and so on. In fact, it makes you wonder why we have spent so much time and effort on building digital super computers for tasks such as weather prediction when an analog computer would do the job much faster. The answer is that at the moment digital is still easier, more flexible and in most cases more accurate than analog. To be successful any new approach would have to deal with these problems.

More Information

Petaflops On Desktops: Ideas Wanted For Processing Paradigms That Accelerate Computer Simulations

Related Articles

Slime mould simulates Canadian transport system


Last Updated ( Friday, 27 March 2015 )