Microsoft Translator API

Written by Sue Gee

Saturday, 02 April 2016

Microsoft has released a new version of its Translator API. This provides developers with the same speech-to-speech facilities as those used in the Skype Translator and in the iOS and Android Microsoft Translator apps.

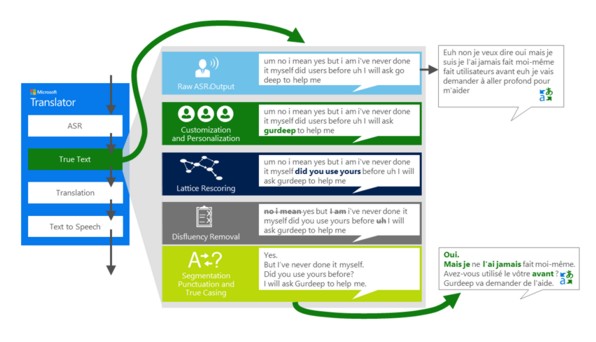

In the blog post announcing the availability of the new Microsoft Translator API, Microsoft describes it as "the first end-to-end speech translation solution optimized for real-life conversations (vs. simple human to machine commands) available on the market". The post also explains how it works using AI technologies, such as deep neural networks for speech recognition and text translation, and outlines four stages for performing speech translation.

Microsoft Translator covers two types of API use and integration:

1) Speech-to-speech translation is available for English, French, German, Italian, Portuguese, Spanish, Chinese Mandarin and Arabic.

2) Speech-to-text translation, for scenarios such as webcasts or BI analysis, allows developers to translate any of these eight supported conversation translation languages into any of the supported 50+ text languages.

A two-hour free trial is available. It provides 7,200 transactions, where one transaction is equivalent to 1 second of audio input, which is the same allowance as the free monthly tier. Beyond this, subscriptions are available.
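
To make the trial arithmetic concrete, here is a minimal Python sketch of how the 7,200-transaction allowance maps to audio time. The one-second-per-transaction figure and the allowance come from the announcement as reported here; rounding partial seconds up, and the function names themselves, are assumptions made for illustration rather than documented billing behaviour.

```python
import math

# From the article: one transaction corresponds to 1 second of audio input.
SECONDS_PER_TRANSACTION = 1
# From the article: size of the free trial, same as the free monthly tier.
FREE_TRANSACTIONS = 7_200


def transactions_for(audio_seconds: float) -> int:
    """Estimate transactions for a clip.

    Assumption: a partial second is rounded up to a whole transaction.
    """
    if audio_seconds < 0:
        raise ValueError("audio_seconds must be non-negative")
    return math.ceil(audio_seconds / SECONDS_PER_TRANSACTION)


def free_allowance_hours() -> float:
    """Convert the free allowance into hours of audio."""
    return FREE_TRANSACTIONS * SECONDS_PER_TRANSACTION / 3600


if __name__ == "__main__":
    print(free_allowance_hours())   # hours of audio covered by the free tier
    print(transactions_for(90.5))   # a 90.5 second clip, rounded up
```

The allowance works out to exactly two hours of audio, which matches the "two-hour free trial" wording.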

The prospect of being able to communicate without language barriers is becoming ever more a reality, and the more we use it the better the facility will become. Ironically, there's an error in the sample Microsoft uses in its artwork above: Gurdeep is the object of the final sentence in the English and becomes the subject in the French. This sort of error should quickly be corrected by machine learning as more data becomes available.

More Information

Microsoft Translator Speech Translation API on Azure Marketplace

Related Articles

Skype Translator Cracks Language Barrier

Skype Translator - Communication Without Language Barriers

Near Instant Speech Translation In Your Own Voice

Speech Recognition Breakthrough


Last Updated ( Saturday, 02 April 2016 )