Scrape Away From KimonoLabs

Written by Nikos Vaggalis

Friday, 11 March 2016

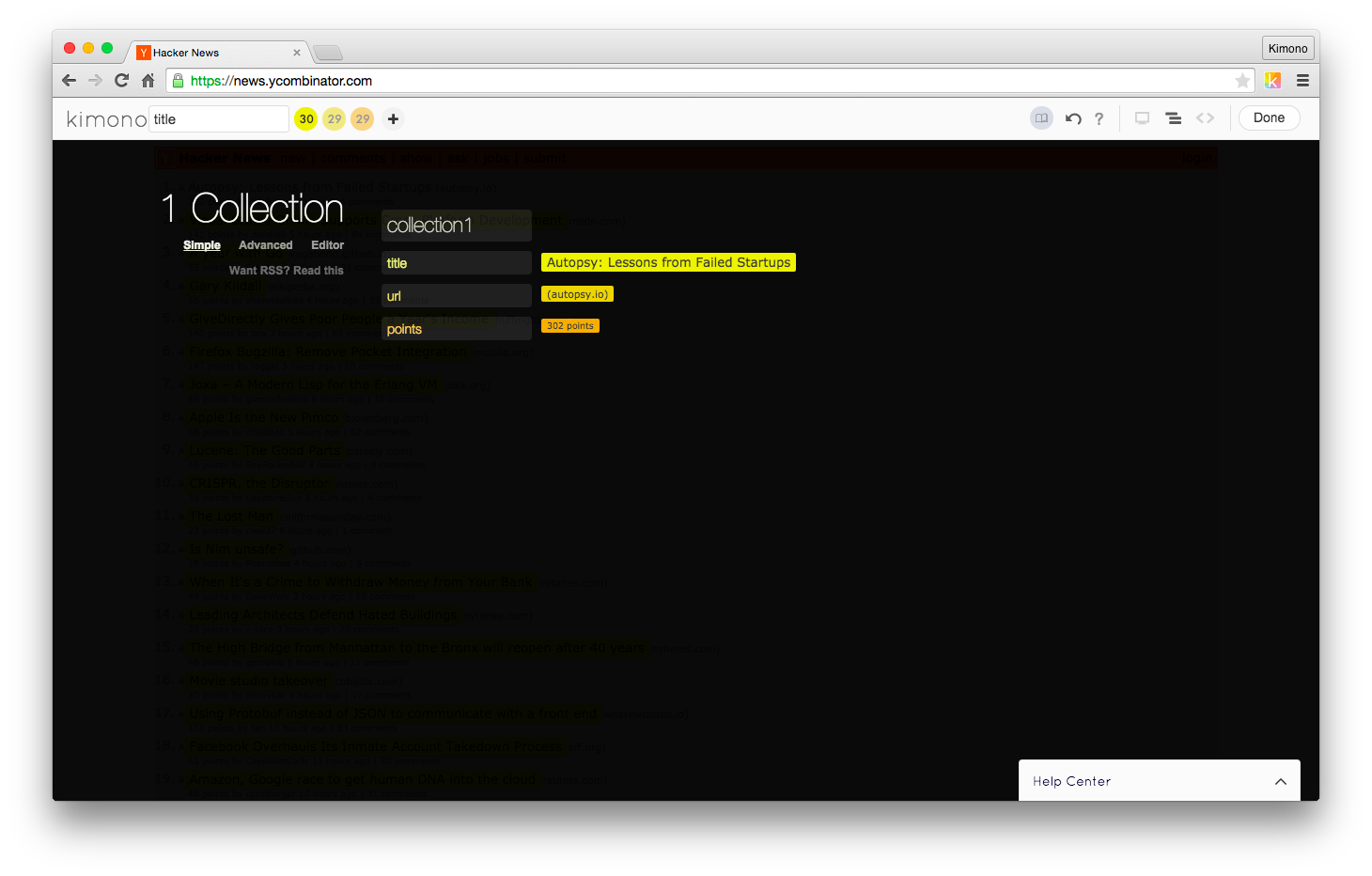

A recent startup, KimonoLabs, established in 2014, set out to change the scraping landscape. Its recently announced takeover by Palantir will shut down its data scraping service. Kimono has produced a desktop app into which devs can import their APIs before the end of March.

Picking up on the growing trend, driven in part by the proliferation of IoT applications, Kimono aimed to build web-based RESTful APIs around them that would be accessible to developers and non-developers alike. Its early successes included integration with the Pebble smart watch for results of the 2014 World Cup. Kimono's APIs promised to take the burden off the user, who would otherwise have had to write code and use tools such as Scrapy for their scraping tasks. In short, Kimono did the scraping for you and provided the results via a standard API.

But the delay in establishing a sustainable revenue plan was probably the major reason for its takeover by Palantir. When the platform began operating, signing up was free and access was unlimited for everyone. The plan was to charge users for having private APIs, or to charge them according to the number of APIs they had deployed. However, there was no premium revenue collection scheme in place, such as charging businesses for using the platform. Pratap Ranade, one of Kimono's co-founders, explains:

Palantir, although absorbing Kimono as an entity, will shut down its data scraping service. This, of course, causes a lot of grief and inconvenience to developers who have already deployed software based on Kimono's APIs.

Kimono states that the desktop app has similar functionality to the online web-based service, but unfortunately it lacks a lot. Previously we benefitted from a web-based endpoint able to scrape data at predefined intervals and emit it classified and in JSON format. The data could be consumed by 'client' applications residing on a VPS that performed post-processing and imported the resulting data into a database automatically, without user intervention. Compare that to the desktop application for Windows or Mac (there's no Linux version), where the user has to start the application manually, whenever that is possible, kick off the scraping task, grab the exported JSON data files and transfer them from the local PC to the VPS by FTP or other clever but involved means. Even worse, the desktop app would not even run on my Windows 7 machine, dying with an exception instead.

Update: Ryan Rowe (co-founder of Kimonolabs) has pointed out that you can have a web-based endpoint with the desktop app by using its Firebase integration. This will automatically push the scraped data to the cloud and provide JSON endpoints for VPS or other device-based apps to access. Firebase has a generous free tier with 1 GB of storage, 10 GB of data transfer up and 100 GB down. So as long as you stay within these limits things aren't bad.

However, basing an application on a desktop app that isn't supported after March obviously doesn't provide any long-term development prospects. Thus alternatives, new or already well established, are looking forward to attracting Kimono's developer base to their platforms. One of those services is Scrapinghub's Portia, which is offering a Kimono2Portia migration service where you just fill in your Kimono credentials and let it import your Kimono APIs into its platform. Another is Import.io, which has taken the initiative of setting up a page titled 'Great alternatives to every feature you'll miss from Kimono Labs'. While it does cover a wide range of options, it also points to its own offerings: an entry-level automatic extraction service available as an online API, and a more 'advanced' point-and-click one delivered as a desktop app, which is therefore prone to the same difficulties as the KimonoLabs app.

Other alternatives include Parsehub, Diffbot and Apifier. All offer more or less the same functionality, which makes choosing the right one a contest decided on points. In this arena, however, Import.io's free Udemy course, 'How to extract data from the web using import.io', does give it a competitive edge. At least there are plenty of alternatives that will hopefully render Kimono's absence a bit more bearable.
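Whichever alternative you pick, the VPS-side workflow described earlier stays much the same: fetch the JSON that the scraper publishes, then load it into a database without manual steps. The following is only a minimal sketch of that idea using the Python standard library. The payload shape (a top-level `results` list of records with `url`, `title` and `price` fields), the `items` table and the function names are assumptions made for illustration, not the format of Kimono, Firebase or any other service.

```python
import json
import sqlite3
import urllib.request


def fetch_payload(url, timeout=30):
    """Download and decode the JSON document published by the scraper."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def import_payload(conn, payload):
    """Insert scraped records, skipping known URLs; return the number of new rows."""
    # Hypothetical schema: one row per scraped page, keyed by its URL.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS items ("
        "url TEXT PRIMARY KEY, title TEXT, price TEXT)"
    )
    before = conn.execute("SELECT COUNT(*) FROM items").fetchone()[0]
    conn.executemany(
        "INSERT OR IGNORE INTO items (url, title, price) VALUES (?, ?, ?)",
        (
            (record["url"], record.get("title"), record.get("price"))
            for record in payload.get("results", [])  # assumed payload shape
        ),
    )
    conn.commit()
    after = conn.execute("SELECT COUNT(*) FROM items").fetchone()[0]
    return after - before


def run_once(url, db_path="scraped.db"):
    """One fetch-and-import cycle, intended to be invoked by a scheduler."""
    conn = sqlite3.connect(db_path)
    try:
        return import_payload(conn, fetch_payload(url))
    finally:
        conn.close()
```

Calling `run_once` from cron or a similar scheduler on the VPS restores the unattended behaviour; because rows are keyed by URL, running it repeatedly against the same data does not create duplicates.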


