How Fast Can You Number Crunch In Python

Written by Mike James

Monday, 08 February 2016

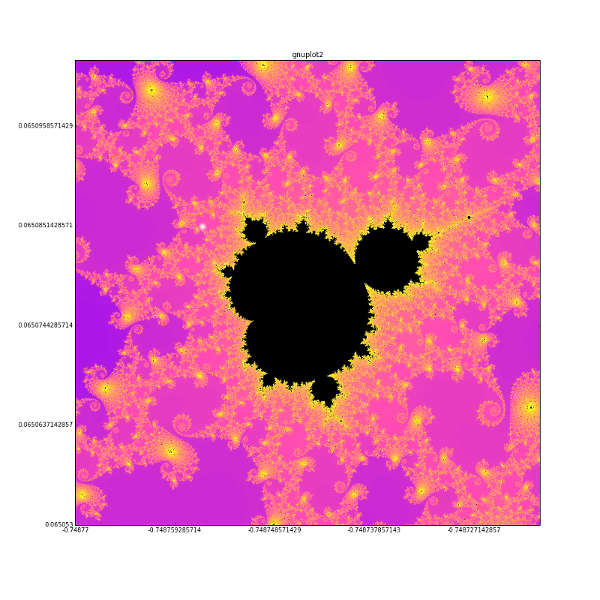

We are all more or less committed to using high-level languages, but there is always a background worry that they might not be fast enough for some tasks. An interesting set of benchmarks shows how to use Python to number crunch. The benchmark in this case is a very simple, but time-consuming, computation: the Mandelbrot set. What is really interesting is not so much the task, which you can think of as a generic number-crunching problem, but the different technologies that Jean Francois Puget, an IBM software engineer, used to compute it.

Starting off with "naive" Python, in which triple-nested loops scan x, scan y and iterate the function, gives a baseline. This is something like 70 times slower than C code. The naive code uses lists to store the results, so the next "optimization" changes the lists to Numpy arrays, still with explicit loops. It turns out to be slower, about 110 times slower than C, even though the data structure, an array, is simpler and in other languages you would expect it to be faster.

If you want arrays to be faster in Python then you need to switch to a compiler that can take advantage of their fixed, regular layout. Numba, a JIT compiler, and Cython both turned in times comparable to simple C code. So at this point it looks as if the language isn't the issue, but rather the way it is implemented. If you have a good enough compiler then a high-level language can be as fast as C, which can be regarded as a low-level, machine-independent assembler.

The next question is whether a high-level language can speed things up by using advanced abstractions that take advantage of the hardware. For example, can vectorization take advantage of SIMD-type operations to make things faster than a purely sequential C program? If the vectorization is provided by Numpy the answer is that it is better, but not by much, at about 3 times slower than sequential C. It is better than non-vectorized Numpy and naive Python, however. Numba Vectorize gives a similar performance at 2 times slower than sequential C. Looking outside the core language, TensorFlow, Google's AI package, can be used to vectorize the calculation. Using just the CPU, TensorFlow turns in about the same sort of speed as vectorized Numpy, i.e. about 3 times slower.

OK, what about using a GPU? PyOpenCL and PyCUDA give fairly direct access to the GPU and, as you might expect, perform more or less the same at around 15 times faster than a sequential C program.
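
To make the "naive" baseline concrete, here is a minimal sketch of what such a pure-Python Mandelbrot computation might look like: two loops scanning y and x, an inner loop iterating the function, and plain lists holding the results. This is not the code from the benchmark post. The function names, the escape radius of 2 and the region and grid size used in the example run are all assumptions made for illustration.

```python
import time


def mandelbrot_point(c, max_iter):
    """Count iterations of z -> z*z + c until |z| exceeds 2 (assumed escape radius)."""
    z = 0j
    for n in range(max_iter):
        if abs(z) > 2.0:
            return n
        z = z * z + c
    return max_iter


def mandelbrot_grid(x_min, x_max, y_min, y_max, width, height, max_iter):
    """Scan a rectangle of the complex plane; return a list of rows of counts."""
    rows = []
    for j in range(height):                  # scan y
        y = y_min + j * (y_max - y_min) / (height - 1)
        row = []
        for i in range(width):               # scan x
            x = x_min + i * (x_max - x_min) / (width - 1)
            row.append(mandelbrot_point(complex(x, y), max_iter))
        rows.append(row)
    return rows


if __name__ == "__main__":
    # Hypothetical region and resolution, chosen only to give a short run.
    start = time.perf_counter()
    grid = mandelbrot_grid(-2.0, 0.5, -1.25, 1.25, 100, 100, 80)
    print(f"computed {len(grid)}x{len(grid[0])} points "
          f"in {time.perf_counter() - start:.3f} s")
```

Every arithmetic operation in the inner loop goes through the interpreter, which is the overhead that the compiled and vectorized approaches discussed here try to remove.
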

If you want to retain a higher-level approach then you can use Numba Guvectorize, which only requires you to put target='parallel' into your code. Note that in Numba this target runs the computation on multiple CPU cores; it is target='cuda' that selects the GPU. The target='parallel' version performs about 3.5 times faster than a sequential C program. The full table of results is:

So there you have it. Pure Python is slow compared to simple C and to make it faster you need to compile it. If you want to go faster than C then you need to move from the CPU to the GPU and this can be done using a high-level approach. This is very much an overview of the results and procedures so go and take a look at the full blog post for the rest of the information.

More Information

How To Quickly Compute The Mandelbrot Set In Python

Related Articles

Let HERBIE Make Your Floating Point Better


Last Updated ( Tuesday, 09 February 2016 )