| Apache Spark 2.0 Technical Preview |

| Written by Kay Ewbank |

| Tuesday, 07 June 2016 |

|

A new version of Apache Spark is now available in technical preview. Apache Spark is an open source data processing engine, and the final Spark 2.0 release is a few weeks away, according to the developers. One feature added in the new version is standard SQL Support. There's a new ANSI SQL parser and support for subqueries. Spark 2.0 can run all the 99 TPC-DS queries, which require many of the SQL:2003 features. This will make it much easier to port apps that use SQL to Spark.

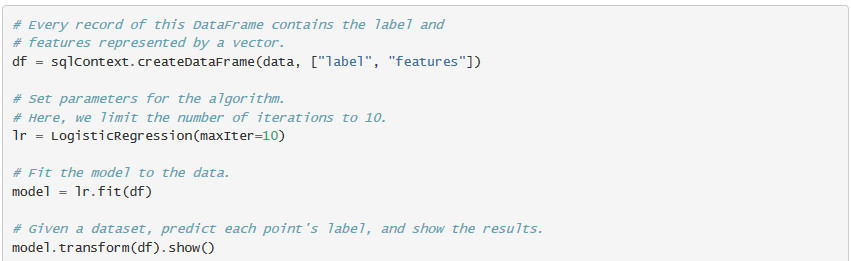

The APIs in Spark 2.0 have been streamlined to make Spark easier to use. DataFrames and Datasets have been unified for Scala and Java. The unification isn't applicable for Python and R as compile-time type safety isn't a feature for those languages. Where the unification does apply, DataFrame is now a type alias for Dataset of Row. The SparkSession API has been reworked with a new entry point that replaces SQLContext and HiveContext, though the old SQLContext and HiveContext have been kept for backward compatibility. The Accumulator API has also been redesigned with a simpler type hierarchy and support specialization for primitive types. Machine learning support has been improved with a spark.ml package, with its “pipeline” APIs, being put forward as the primary machine learning API. While the original spark.mllib package is preserved, future development will focus on the DataFrame-based API. Pipleline persistence has been added, so users can save and load machine learning pipelines and models across all programming languages supported by Spark. MLlib is Spark's scalable machine learning library. It fits into Spark's APIs and interoperates with NumPy in Python (starting in Spark 0.9). You can use any Hadoop data source (e.g. HDFS, HBase, or local files), making it easy to plug into Hadoop workflows. The sample code below shows Python code for prediction with logistic regression using MLib.

R support is another area to be improved, with support for Generalized Linear Models (GLM), Naive Bayes, Survival Regression, and K-Means in R. Another improvement is a Structured Streaming APIs that takes a novel way to approach streaming. This is an initial version of the Structured Streaming API that is an extension to the DataFrame/Dataset API. Key features will include support for event-time based processing, out-of-order/delayed data, sessionization and tight integration with non-streaming data sources and sinks. The preview is available either from Apache Spark or on Databricks, where the Databricks team has been contributing to Spark over recent months.

More InformationRelated Articles

To be informed about new articles on I Programmer, sign up for our weekly newsletter,subscribe to the RSS feed and follow us on, Twitter, Facebook, Google+ or Linkedin.

Comments

or email your comment to: comments@i-programmer.info |

| Last Updated ( Tuesday, 07 June 2016 ) |