PlaNet Good At Photo GeoLocation

Written by David Conrad

Wednesday, 02 March 2016

Is it possible to train a system to determine where a photo was taken without any additional input? A team of Google researchers has tackled this problem and come up with a solution that outperforms human experts.

The model, called PlaNet, performs photo geolocation with deep learning. It is the work of a team led by Tobias Weyand, a computer vision specialist at Google. According to the abstract of the paper outlining the project:

"In computer vision, the photo geolocation problem is usually approached using image retrieval methods. In contrast, we pose the problem as one of classification by subdividing the surface of the earth into thousands of multiscale geographic cells, and train a deep network using millions of geotagged images."
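To make the "classification over geographic cells" idea concrete, here is a minimal sketch of one way to carve the map into multiscale cells so that each photo's location becomes a class label. It uses a plain latitude/longitude quadtree, which is an assumption of this example and not necessarily how PlaNet partitions the globe. The names `Cell`, `build_cells`, `cell_label` and the thresholds `max_photos`, `min_photos` and `max_depth` are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # (latitude, longitude) in degrees


@dataclass(frozen=True)
class Cell:
    """A lat/lon rectangle: south <= lat < north and west <= lon < east."""
    south: float
    west: float
    north: float
    east: float

    def contains(self, lat: float, lon: float) -> bool:
        return self.south <= lat < self.north and self.west <= lon < self.east


def build_cells(points: List[Point], cell: Cell, max_photos: int,
                min_photos: int, max_depth: int) -> List[Cell]:
    """Recursively split a cell into quadrants until each holds few photos.

    Busy areas end up with many small cells and quiet areas with a few
    large ones. Cells with fewer than min_photos photos are dropped, so
    parts of the map are left uncovered.
    """
    inside = [p for p in points if cell.contains(*p)]
    if len(inside) < min_photos:
        return []          # too few photos: no class for this area
    if len(inside) <= max_photos or max_depth == 0:
        return [cell]      # small enough, or depth limit reached
    mid_lat = (cell.south + cell.north) / 2
    mid_lon = (cell.west + cell.east) / 2
    quadrants = [
        Cell(cell.south, cell.west, mid_lat, mid_lon),
        Cell(cell.south, mid_lon, mid_lat, cell.east),
        Cell(mid_lat, cell.west, cell.north, mid_lon),
        Cell(mid_lat, mid_lon, cell.north, cell.east),
    ]
    cells: List[Cell] = []
    for quadrant in quadrants:
        cells.extend(build_cells(inside, quadrant, max_photos,
                                 min_photos, max_depth - 1))
    return cells


def cell_label(cells: List[Cell], lat: float, lon: float) -> int:
    """Return the index of the cell containing the point, or -1 if uncovered."""
    for index, cell in enumerate(cells):
        if cell.contains(lat, lon):
            return index
    return -1


# Illustrative photo locations (not data from the paper).
photos = [(48.85, 2.35), (48.86, 2.34), (48.80, 2.30),
          (40.71, -74.00), (-33.87, 151.21)]
world = Cell(-90.0, -180.0, 90.0, 180.0)
cells = build_cells(photos, world, max_photos=2, min_photos=1, max_depth=10)
labels = [cell_label(cells, lat, lon) for lat, lon in photos]
print(len(cells), labels)
```

The integer returned by `cell_label` is what a classifier would be trained to predict for each image, and a location with no surrounding cell simply has no class.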

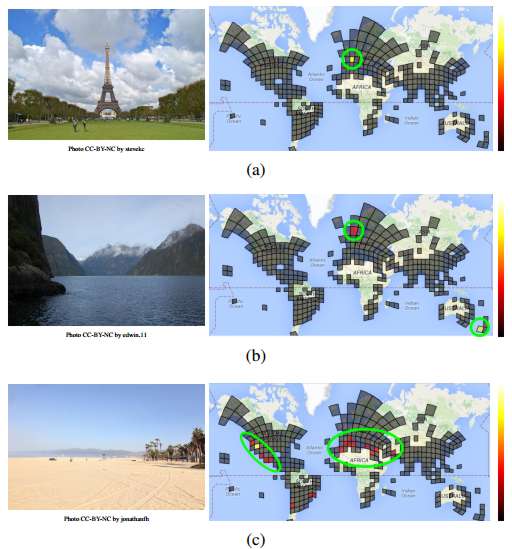

When presented with a photograph, PlaNet outputs a probability distribution over the map, shown on a color scale that runs from dark to light with red for intermediate values. Notice that the grid doesn't cover the entire map. Areas such as central Africa and China, where there are few tagged photos available, are left out, as are much of Canada and Australia.

Here is the explanation of the results for the three photos above. While the Eiffel Tower (a) is confidently assigned to Paris, the model believes that the fjord photo (b) could have been taken in either New Zealand or Norway. For the beach photo (c), PlaNet assigns the highest probability to southern California (correct), but some probability mass is also assigned to places with similar beaches, like Mexico and the Mediterranean.

PlaNet was supplied with 2.3 million geotagged images from Flickr to see whether it could correctly determine their location. It was able to localize 3.6 percent of the images at street-level accuracy and 10.1 percent at city-level accuracy. It determined the country of origin for 28.4 percent of the photos and the continent for 48.0 percent of them.

The researchers came up with a novel way of comparing the model's performance with that of humans. They used GeoGuessr, an online game that presents the player with a random Street View panorama (sampled from all Google Street View panoramas across the world) and asks them to place a marker on a map at the location where the panorama was captured. This is a difficult task given that the majority of panoramas are in rural areas containing few or no geographical clues. Once you make a guess, GeoGuessr reveals where the photograph was taken and awards points based on the distance between the guess and the true location.
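The distance between a guess and the true location is normally measured along the surface of the globe. As a minimal sketch, the haversine formula below computes that great-circle distance in kilometers. The function name `haversine_km` and the mean Earth radius of 6371 km are assumptions of this example, and it says nothing about how the game turns distance into points.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, an assumption of this sketch


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    # Clamp to 1.0 to guard against rounding error for near-antipodal points.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


# Example: a guess in London for a panorama captured in Paris.
error_km = haversine_km(51.5074, -0.1278, 48.8566, 2.3522)
print(round(error_km))
```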

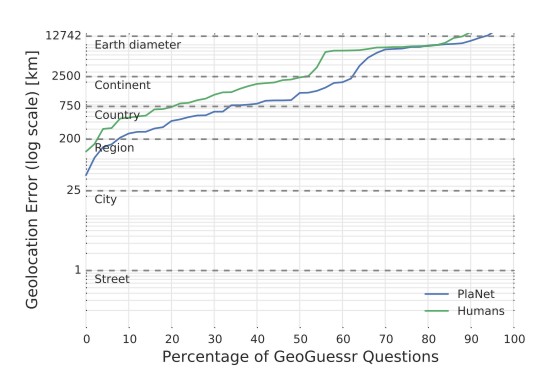

In Challenge mode two players are shown the same set of five panoramas. PlaNet played against 10 human subjects, with a different set of panoramas each time. PlaNet won 28 of the 50 rounds, with a median localization error of 1131.7 km compared to 2320.75 km for the human players. The chart below shows the percentage of panoramas that humans and PlaNet each localized within a given distance. It reveals that PlaNet localized 17 panoramas at country granularity (750 km), while humans localized only 11 panoramas within this radius.

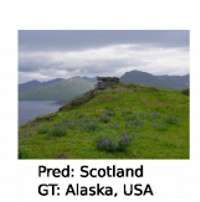
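Both figures quoted above, the median localization error and the number of panoramas localized within a radius, are simple summaries of a list of per-panorama errors. The sketch below shows how they could be computed. The helper `fraction_within` is hypothetical, and the error values are made up for illustration and are not the study's data. Only the 750 km country radius comes from the article.

```python
from statistics import median
from typing import Sequence


def fraction_within(errors_km: Sequence[float], radius_km: float) -> float:
    """Fraction of localization errors that fall within radius_km."""
    if not errors_km:
        raise ValueError("errors_km must not be empty")
    return sum(e <= radius_km for e in errors_km) / len(errors_km)


# Made-up per-panorama errors in km, for illustration only.
illustrative_errors_km = [12.0, 480.0, 900.0, 2500.0, 7000.0]

median_error_km = median(illustrative_errors_km)
country_level = fraction_within(illustrative_errors_km, 750.0)
print(median_error_km, country_level)
```

Evaluating `fraction_within` over a range of radii gives the kind of cumulative curve plotted in the chart.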

The researchers comment:

"We think PlaNet has an advantage over humans because it has seen many more places than any human can ever visit and has learned subtle cues of different scenes that are even hard for a well-traveled human to distinguish."

PlaNet still gets it wrong sometimes, but the fact that it is a neural network means that it can be expected to improve in the future.

The researchers have also extended PlaNet to use information from sequences of photographs contained in photo albums, which enables it to reach 50% higher performance than the single-image model.

Weyand and his colleagues seem to be well on the way to providing a useful solution to the problem of geolocation on the basis of photographs. This is a much sought-after capability. Back in 2011 we reported that IARPA, the US Intelligence Advanced Research Projects Activity, was looking for help from developers for Finder, software that could tell you where a photograph was taken:

"If successful, the Finder system will deliver a rigorously-tested technology solution for image and video geolocation tasks in any outdoor terrestrial location."

The following year we followed up with IARPA Awards $15.6 Million To Find Where A Photo Was Taken, noting that phase one of the project was estimated to be completed in 2014. The project still seems to be ongoing, but as counter-terrorism is the objective we may not get to hear about its successful completion.

Google meanwhile is managing to do a great deal with photos. We recently reported how computational photography is amassing photos from all around the world, taken by different people at different times, and putting them together to make time-lapse videos of popular locations. Once you put together the ever-increasing supply of publicly available geotagged images with the classification abilities provided by deep learning, there will soon be no hiding place.

More Information

arxiv.org/abs/1602.05314: PlaNet - Photo Geolocation with Convolutional Neural Networks by Tobias Weyand, Ilya Kostrikov, James Philbin

Related Articles

IARPA Awards $15.6 Million To Find Where A Photo Was Taken

Time-Lapse Videos From Internet Photos


Last Updated ( Wednesday, 02 March 2016 )