Perfect Pictures In Almost Zero Light

Written by David Conrad

Sunday, 13 May 2018

Take a camera and a neural network, suitably trained, and you can take photos in almost zero light that look as though the sun was shining.

I'm almost tired of saying what amazing things computational photography is doing to change the way we make images, but this is some sort of breakthrough.

If you know your camera technology, you will know that taking images in full sun is child's play, but as soon as the light gets a little bit restricted you have to engage in a juggling act with exposure time v aperture v film speed. The longer the exposure time, the more light is captured, but things move and so blurring is a real problem. If you increase the aperture then you capture more light but reduce the depth of field, and so blurring is again a real problem. If you increase the film speed, or today more accurately sensor sensitivity, then you respond more to the available light, but the noise increases, leading to a grainy image.

Sometimes these things work to your advantage and can be used creatively - long exposures remove fast-moving objects or blur them into a flow, large apertures produce a blurry background and high film speeds produce a traditionally grainy, gritty appearance. Interestingly, today's sensors don't work like this; they just increase the amount of "salt and pepper" noise in the image, which is nearly always bad.
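The juggling act described above can be put into rough numbers. The following is a minimal sketch, assuming the usual rule of thumb that image brightness scales with exposure time times sensitivity divided by the square of the f-number. The function names and the sample settings are made up for illustration and are not taken from the research.

```python
import math

def relative_exposure(shutter_s, f_number, iso):
    """Image brightness up to a constant factor: t * ISO / N^2."""
    return shutter_s * iso / (f_number ** 2)

def stops_between(a, b):
    """How many stops brighter setting b is than setting a (negative means darker)."""
    return math.log2(relative_exposure(**b) / relative_exposure(**a))

# Hypothetical starting point, then one change at a time.
base = dict(shutter_s=1 / 60, f_number=5.6, iso=400)
longer = dict(base, shutter_s=1 / 30)  # double the exposure time: more motion blur
wider = dict(base, f_number=4.0)       # open the aperture: less depth of field
faster = dict(base, iso=800)           # double the sensitivity: more noise

for name, setting in [("longer", longer), ("wider", wider), ("faster", faster)]:
    print(name, round(stops_between(base, setting), 2))
```

Each of the three changes buys about one stop, that is a doubling of brightness, and each pays for it with a different defect.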

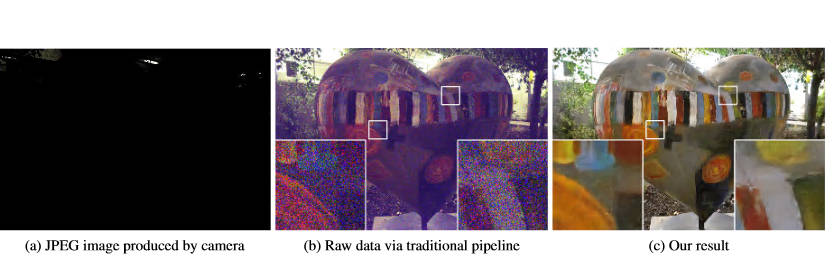

There are ways to improve images using standard and very clever photo filtering and manipulation, but what about taking a neural network and teaching it what a low light photo should look like in reality? This is what Chen Chen, Qifeng Chen, Jia Xu and Vladlen Koltun, working at Intel Labs and the University of Illinois at Urbana-Champaign, have done. They took a very low light scene, 0.1 lux, and a camera with an exposure of 1/30s at f/5.6 using an ISO 8000 sensitivity and ended up with what looks like blackness. By assembling a library of similar photos plus some long-exposure, more normal-looking versions, they trained a neural network to convert the black photos into something that looked as if it had been taken in ordinary light levels. The library is a significant resource in its own right and is available to others to use to see if it is possible to do better.
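To get a feel for why those settings produce blackness, here is a back-of-the-envelope sketch. It assumes the standard incident-light exposure equation N²/t = E·S/C with a meter calibration constant C of about 250, and it treats the quoted 0.1 lux as the light falling on the subject. Both are assumptions made for illustration, not figures from the paper.

```python
import math

def underexposure_stops(lux, shutter_s, f_number, iso, c=250.0):
    """Stops of underexposure relative to a light-meter recommendation.

    Uses the incident-light exposure equation N^2 / t = E * S / C.
    The calibration constant c=250 is an assumed, typical value.
    """
    wanted = lux * iso / c             # N^2/t a meter would recommend
    used = f_number ** 2 / shutter_s   # N^2/t actually set on the camera
    return math.log2(used / wanted)

stops = underexposure_stops(lux=0.1, shutter_s=1 / 30, f_number=5.6, iso=8000)
print(f"about {stops:.1f} stops underexposed")
```

Under these assumptions the frame is roughly eight stops short, so the sensor receives a few hundred times less light than a conventional exposure would call for.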

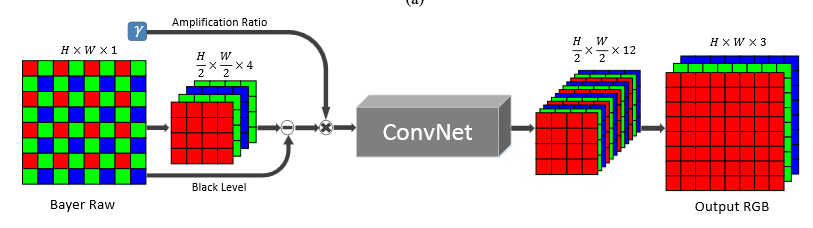

The technique works with raw sensor data and at the moment it is sensor specific, so the model works best with the camera it is trained on.

There is no doubt that it works, but how does it work? The neural network must be learning how low light changes the way the sensor captures data. It must be performing histogram scaling to spread the small range of brightness values across the full available range, but it must also be taking into account the way colors change at low light and the way shapes and light interact.

What could it be used for? At the moment the processing time is too slow for realtime, but you can imagine a mobile phone camera capable of taking good quality photos in what otherwise would be pitch black.

However, if you start to imagine more serious applications then some questions need answering. For example, if you used this approach to create night vision goggles for surveillance or battle there is the small problem of artifacts. How can we be certain that the reconstruction is correct? Suppose the neural network "hallucinates" something or fails to include something. This is not just a problem with this particular work; the difficulty of validating neural networks is a general one.

The authors have suggestions for further work and one of them is to include High Dynamic Range rendering - so not only do you start out with a black image, you end up with something that exceeds what you would have achieved in normal light with a standard camera.

The code and all the data are available on GitHub so you can try it out for yourself.
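The histogram scaling mentioned above is easy to sketch on its own, and doing so shows why it cannot be the whole story. This is a minimal illustration of plain linear contrast stretching on a handful of made-up 8-bit pixel values. It is the conventional baseline, not the network's method, and the function name and data are hypothetical.

```python
def stretch_contrast(pixels, out_max=255):
    """Linearly map the input range [min, max] onto [0, out_max]."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        # A perfectly flat image carries no contrast to stretch.
        return [0 for _ in pixels]
    scale = out_max / (hi - lo)
    return [round((p - lo) * scale) for p in pixels]

# Hypothetical 8-bit values from a near-black frame.
dark = [2, 3, 3, 4, 2, 5, 3, 4, 6, 2]
bright = stretch_contrast(dark)

print(bright)
print(len(set(dark)), "distinct levels in,", len(set(bright)), "distinct levels out")
```

The stretched values span the full range, but there are still only as many distinct levels as the dark frame contained, and a one-level flicker of sensor noise in the input becomes a jump of about a quarter of the output range. Anything beyond that has to come from what the network has learned about how real scenes look.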

Left - best conventional; right - neural network

More Information

https://arxiv.org/abs/1805.01934


Last Updated ( Sunday, 13 May 2018 )