Seeing Buildings Shake With Software

Written by David Conrad

Sunday, 26 April 2015


With motion magnification, monitoring large structures could become cheap enough to be routine. All you need is a video camera, and you can literally see rigid buildings move like reeds in the wind.

Software-based motion and color magnification has been around for a while. It first came to public attention at SIGGRAPH in 2005, with a method that tracked the movement of pixels directly and then applied the detected movement to the image on a larger scale. In 2012 a team from MIT CSAIL discovered that you could get motion magnification by filtering the color changes of individual pixels over time. The method doesn't track movement directly; instead it amplifies the color changes that result from the movement. With this technique the team could do some amazing things that depend on directly observing color change, such as seeing the pulse in a wrist or blood pulsing in a face. The important, and almost accidental, discovery was that magnifying color changes also magnifies motion. The new method is also fast enough to work in real time.
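Why amplifying color changes also amplifies motion can be sketched in a few lines. To first order, shifting an intensity profile f by a small amount δ changes each pixel's value by about −δ·f′(x). Band-pass filtering each pixel's value over time isolates that change, and adding it back multiplied by α gives roughly the profile shifted by (1+α)δ. The code is a simplified, hypothetical NumPy illustration on a synthetic one-dimensional "video", not the MIT implementation: it uses an ideal FFT band-pass filter and omits the spatial pyramid decomposition of the published method. The helper names (`temporal_bandpass`, `magnify`, `estimate_shift`) and all parameter values are assumptions chosen for illustration.

```python
import numpy as np


def temporal_bandpass(frames, fps, lo_hz, hi_hz):
    """Ideal band-pass filter along the time axis (axis 0) of a (T, N) array."""
    spectrum = np.fft.rfft(frames, axis=0)
    freqs = np.fft.rfftfreq(frames.shape[0], d=1.0 / fps)
    spectrum[(freqs < lo_hz) | (freqs > hi_hz)] = 0  # zero the out-of-band bins
    return np.fft.irfft(spectrum, n=frames.shape[0], axis=0)


def magnify(frames, fps, lo_hz, hi_hz, alpha):
    """Linear Eulerian-style magnification: add back the amplified band-passed signal."""
    return frames + alpha * temporal_bandpass(frames, fps, lo_hz, hi_hz)


def estimate_shift(frames, x):
    """Recover d in sin(x - d) for each frame from projections onto sin and cos."""
    a = frames @ np.sin(x) * 2 / len(x)  # equals cos(d) for a pure shift
    b = frames @ np.cos(x) * 2 / len(x)  # equals -sin(d) for a pure shift
    return np.arctan2(-b, a)


# Hypothetical synthetic "video": a 256-pixel sinusoidal intensity pattern that
# shifts by a tiny 2 Hz oscillation (amplitude 0.005 rad), 300 frames at 30 fps.
fps, n_frames, n_pixels = 30, 300, 256
t = np.arange(n_frames) / fps
x = 2 * np.pi * np.arange(n_pixels) / n_pixels
delta = 0.005 * np.sin(2 * np.pi * 2.0 * t)
frames = np.sin(x[None, :] - delta[:, None])

# Magnify only the 1-3 Hz band of each pixel's intensity over time.
alpha = 20.0
magnified = magnify(frames, fps, lo_hz=1.0, hi_hz=3.0, alpha=alpha)

true_peak = np.max(np.abs(estimate_shift(frames, x)))
magnified_peak = np.max(np.abs(estimate_shift(magnified, x)))
gain = magnified_peak / true_peak
print(f"motion gain approx {gain:.2f} (small-motion prediction: {1 + alpha:.0f})")
```

No pixel is tracked, yet the apparent motion grows by close to 1+α. The agreement degrades as (1+α)δ grows, because the first-order approximation only holds for small motions.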

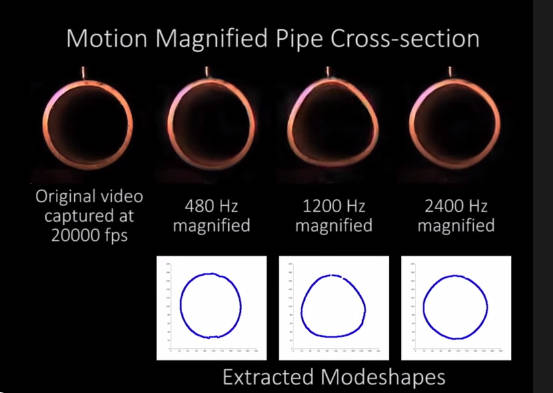

Now another MIT team, led by Oral Buyukozturk, has set out to use the technique for monitoring structures, directly seeing the vibrations in buildings, bridges and other constructions. Currently such monitoring involves instrumenting the structure with accelerometers. This is expensive and generally doesn't give a complete "picture" of what is happening to the building. It would be much simpler to point a video camera at the building and use motion magnification software to see the vibrations. The problem is that you can't be sure that what the software extracts from the video really is the motion of the object, or how it relates to the data you would get from the standard accelerometer-based method. To confirm and calibrate the approach, the team filmed a PVC pipe being struck with a hammer and also measured its response with accelerometers and laser vibrometers. The motion-magnified video showed the pipe vibrating in the expected normal modes. You can see how well it worked in the following video:
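One simple way to check video-derived motion against an accelerometer is to compare the frequencies at which each signal's spectrum peaks. Displacement and acceleration of the same vibration mode oscillate at the same frequency, even though an accelerometer weights higher modes more heavily. The sketch uses generic FFT peak picking in NumPy and is not the procedure from the MIT paper. The two mode frequencies (48 Hz and 130 Hz), the damping, the sample rate and the helper `modal_frequencies` are all hypothetical, and the "accelerometer" trace is simply the numerical second derivative of the synthetic displacement.

```python
import numpy as np


def modal_frequencies(signal, fs, n_modes):
    """Return the n_modes strongest spectral peak frequencies (Hz), sorted.

    Hypothetical helper: plain FFT peak picking, no windowing or curve fitting.
    """
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    # A bin counts as a peak if it is larger than both neighbours
    # (the DC and Nyquist bins are never considered).
    interior = spectrum[1:-1]
    is_peak = (interior > spectrum[:-2]) & (interior > spectrum[2:])
    peak_idx = np.nonzero(is_peak)[0] + 1
    strongest = peak_idx[np.argsort(spectrum[peak_idx])[::-1][:n_modes]]
    return np.sort(freqs[strongest])


# Hypothetical free decay after a hammer strike: two damped modes,
# sampled at 2 kHz for 2 s (0.5 Hz frequency resolution).
fs = 2000.0
dt = 1.0 / fs
t = np.arange(int(2.0 * fs)) * dt
decay = np.exp(-t / 0.3)
displacement = decay * (1.0 * np.sin(2 * np.pi * 48.0 * t)
                        + 0.5 * np.sin(2 * np.pi * 130.0 * t))

# Stand-in for an accelerometer: numerical second derivative of displacement.
acceleration = np.gradient(np.gradient(displacement, dt), dt)

video_modes = modal_frequencies(displacement, fs, n_modes=2)
accel_modes = modal_frequencies(acceleration, fs, n_modes=2)
print("from displacement (video):", video_modes)
print("from acceleration (accelerometer):", accel_modes)
```

Both traces should report peaks near 48 Hz and 130 Hz. With real measurements the same comparison would be made against the accelerometer and vibrometer recordings rather than a derived signal.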

The technique clearly has other potential uses, and the good news is that the original software (not the improved version used here) is available on GitHub under an open source license.

More Information

- Developments with Motion Magnification for Structural Modal Identification through Camera Video (pdf)

Related Articles

- See Invisible Motion, Hear Silent Sounds Cool? Creepy?
- Extracting Audio By Watching A Potato Chip Packet
- Computer Model Explains High Blood Pressure
- Super Seeing Software Ready To Download
- Super Seeing - Eulerian Magnification


Last Updated (Sunday, 26 April 2015)