Extracting Audio By Watching A Potato Chip Packet

Written by Harry Fairhead

Wednesday, 06 August 2014

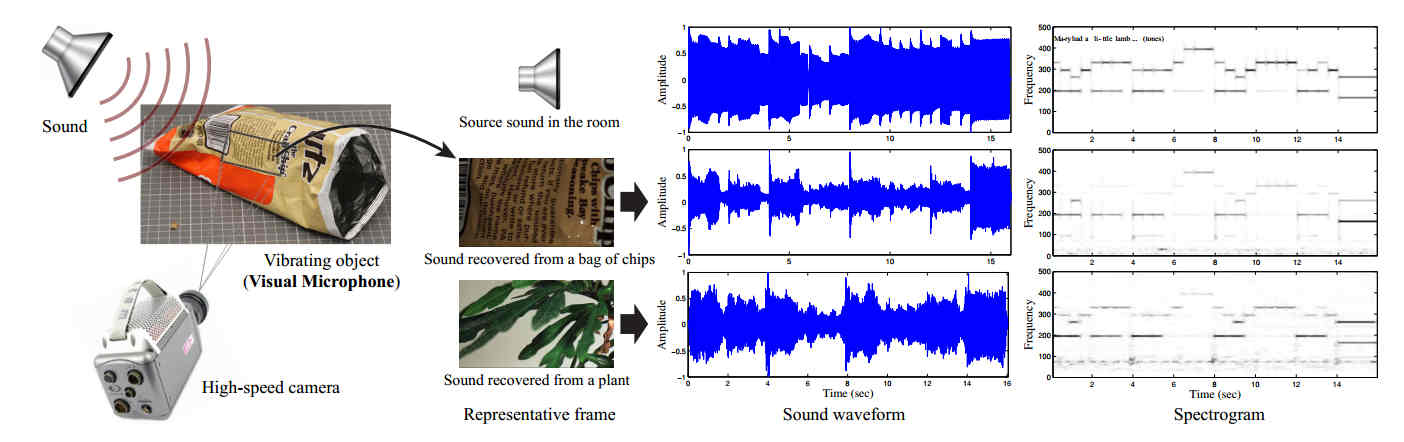

This particular breakthrough sounds like a hoax, but given the pedigree of the researchers involved - MIT, Microsoft and Adobe - we'd better take it seriously. Taken seriously, it has a certain wow factor that makes you think that with the right algorithm anything is possible.

You are probably used to all the silly things that computers do in films and TV shows, but this is like something from a bad CSI show that happens to be real. The researchers have used a video camera to recover sounds from the way objects move in response to sound. This seems like a straightforward technical task until you realize that it doesn't need a specially prepared object: the demo involved a packet of potato chips behind soundproof glass at fifteen feet. Other successes involved aluminium foil, the surface of a glass of water, and the leaves of a potted plant.

Of course, the physical principles involved are fairly obvious and well known. Sound is just vibrations in the air, and these vibrations cause objects in the same room to vibrate. The difficulty is that the object vibrations are very small and relating them to the original sound is complex. There is also the small fact that to pick up audio frequencies you need a video camera with a high frame rate: 2,000 to 6,000 frames per second is required, because a camera can only capture vibrations up to half its frame rate. Most computer video cameras manage about 60 frames per second.

Even so, and this is very clever, you can get information about movement from a standard 60 frames-per-second video camera. The trick is to look for slight distortions in the edges of objects as the frame scan proceeds. As the light sensor is read out a row at a time, each row records the scene at a slightly different instant, so you can infer movements faster than the frame rate by looking for shifts in an edge position between each row of pixels.
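To make the row-by-row argument concrete, here is a minimal simulation sketch in Python with NumPy. It is not the researchers' code: the sensor size, the 440 Hz tone, the vibration amplitude and the helper `dominant_frequency` are all illustrative assumptions, and it assumes that row readout fills the whole frame period with no idle gap between frames, which real cameras do not guarantee.

```python
import numpy as np

FPS = 60          # frame rate of the simulated camera (assumed)
ROWS = 480        # sensor rows read out one after another (assumed)
TONE_HZ = 440.0   # frequency of the sound shaking the object (assumed)
N_FRAMES = 30     # half a second of video

# Assumption: rows are read out back to back with no gap between frames,
# so the edge is effectively sampled FPS * ROWS times per second.
row_rate = FPS * ROWS
t = np.arange(N_FRAMES * ROWS) / row_rate

# Horizontal position (in pixels) of a vertical edge as seen by each row.
# The vibration is tiny: one twentieth of a pixel.
edge_position = 100.0 + 0.05 * np.sin(2 * np.pi * TONE_HZ * t)


def dominant_frequency(samples, sample_rate):
    """Return the frequency (Hz) of the strongest non-DC spectral peak."""
    samples = samples - np.mean(samples)          # remove the static offset
    spectrum = np.abs(np.fft.rfft(samples))
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / sample_rate)
    return freqs[np.argmax(spectrum)]


# Using every row: the sample rate is 28,800 Hz, so 440 Hz is recoverable.
per_row = dominant_frequency(edge_position, row_rate)

# Using one measurement per frame: the sample rate is only 60 Hz,
# so the 440 Hz tone is aliased to a much lower frequency.
per_frame = dominant_frequency(edge_position[::ROWS], FPS)

print(f"per-row estimate:   {per_row:.1f} Hz")
print(f"per-frame estimate: {per_frame:.1f} Hz")
```

With these numbers the per-row signal peaks at the original 440 Hz tone, while sampling once per frame folds it down to 20 Hz, which is why the frame rate alone is not enough.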

The technique also makes use of the small changes in the color of a pixel on the edge of an object. If the object is red and the background yellow, then as the edge moves to cover more or less of the pixel, the color the pixel senses changes from red to orange and then to yellow. Overall, however, it seems that the key technique in the process is the Eulerian magnification algorithm, the basis of the "super seeing" software we described some months ago. This filters and amplifies changes in a visual scene. Its first application was to pick out a person's pulse from the movement of the veins or from the color change in the face. The algorithm, with some specialization, is used to detect the motion of the edges of an object, and from this the sound that caused it to vibrate is inferred. The researchers have created a video that shows the technique in action.
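The red-to-orange-to-yellow idea can be sketched as a simple linear mixing model. This is an illustration only, not the method used in the paper: the RGB values and the functions `mixed_pixel` and `estimate_coverage` are hypothetical, and the model assumes the sensor responds linearly to light, ignoring gamma and noise.

```python
import numpy as np

# Assumed colors of the object and the background, as linear RGB.
RED = np.array([255.0, 0.0, 0.0])
YELLOW = np.array([255.0, 255.0, 0.0])


def mixed_pixel(coverage):
    """Color of an edge pixel when `coverage` (0..1) of its area is red."""
    return coverage * RED + (1.0 - coverage) * YELLOW


def estimate_coverage(pixel):
    """Recover the covered fraction by projecting the pixel color onto
    the line that joins the background color to the object color."""
    direction = RED - YELLOW
    fraction = np.dot(pixel - YELLOW, direction) / np.dot(direction, direction)
    return float(np.clip(fraction, 0.0, 1.0))


# A pixel that is half covered comes out orange.
orange = mixed_pixel(0.5)

# If the edge then moves by 0.02 pixel, the color shifts slightly and the
# change in estimated coverage gives the sub-pixel displacement.
before = estimate_coverage(mixed_pixel(0.50))
after = estimate_coverage(mixed_pixel(0.52))
print(f"edge moved by about {after - before:.3f} pixel")
```

The point of the sketch is that a color change in a single edge pixel carries position information far finer than the pixel grid, which is what makes such tiny vibrations measurable at all.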

The sound recovered by the standard camera isn't as good, but it's still impressive. The paper is to be presented at this year's ACM SIGGRAPH meeting. As Alexei Efros from the University of California at Berkeley said: “We’re scientists, and sometimes we watch these movies, like James Bond, and we think, ‘This is Hollywood theatrics. It’s not possible to do that. This is ridiculous.’ And suddenly, there you have it. This is totally out of some Hollywood thriller. You know that the killer has admitted his guilt because there’s surveillance footage of his potato chip bag vibrating.” What more is there to say? Especially if there is a potato chip bag around...

More Information

The Visual Microphone: Passive Recovery of Sound from Video

Related Articles

Super Seeing Software Ready To Download

Super Seeing - Eulerian Magnification

Halide - New Language For Image Processing

Camera Fast Enough To Track Ping Pong Balls

Computational Photography On A Chip


Last Updated ( Wednesday, 06 August 2014 )