| Any Mobile Camera Can Be A 3D Scanner |

| Written by David Conrad | |||

| Tuesday, 10 December 2013 | |||

|

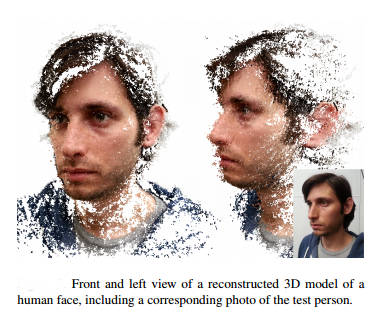

Take a standard mobile phone with camera, add some software and take a 3D scan of any object. It is that easy and you don't need extra hardware - a problem for the Kinect? A team at ETH Zurich has managed to get the necessary optical processing code to work out a 3D reconstruction from a set of 2D photos. All the user has to do is move the phone around the object and the software works out when to take pictures. It knows where it is by using the accelerometers to calculate its position relative to the first picture taken. The user also gets feedback on what parts of the object have been covered and can add views to, for example, see behind the object. Such computations previously needed a big computer, but now with optimization and the help of a GPU they can be done on a modest mobile phone. As no server is involved in the processing, the phone can be used to capture a 3D model even when not connected to the Internet.

Although the ability to scan an object using a simple phone is a great tool, it isn't going to push out 3D cameras like the Kinect. Depth cameras provide a real time flow of depth information that can be used by robots and game players. The usefulness of this sort of software is in capturing isolated 3D models. The examples given in the video, i.e. scanning museum exhibits, sound great, but it isn't clear how well this would go down with curators who normally ban any sort of photography. Even so you can believe that there are reasons for wanting the app.

What is more interesting is to speculate on where this single-sensor multiple view 3D reconstruction could go in the future. The reason that 3D cameras are useful in vision tasks is that the images they produce have far more redundancy. In a color image two close pixels of the same color aren't necessarily part of the same 3D object, but two close pixels at the same depth in a depth image are very likely to be part of the same 3D object. This makes implementing computer vision algorithms much easier. If you can extract 3D depth data from a single sensor moving to different points of view you can use the same depth algorithms to implement computer vision. It could be the breakthrough in computer vision we are looking for. The big problem is that the ETH Zurich press release ends with the chilling line: The patent pending technology was developed exclusively by ETH Zurich and can run on a wide range of current smartphones.

More InformationYour smartphone as a 3D scanner Live Metric 3D Reconstruction on Mobile Phones pdf Related ArticlesMegastereo - Panoramas With Depth Google - We Have Ways Of Making You Smile Computational Photography On A Chip Super Seeing Software Ready To Download Blink If You Don't Want To Miss it Better 3D Meshes Using The Nash Embedding Theorem Light field camera - shoot first, focus later

To be informed about new articles on I Programmer, install the I Programmer Toolbar, subscribe to the RSS feed, follow us on, Twitter, Facebook, Google+ or Linkedin, or sign up for our weekly newsletter.

Comments

or email your comment to: comments@i-programmer.info

|

|||

| Last Updated ( Monday, 09 December 2013 ) |