| AI Creates Breakthrough Realistic Animation |

| Written by David Conrad | |||

| Sunday, 03 June 2018 | |||

|

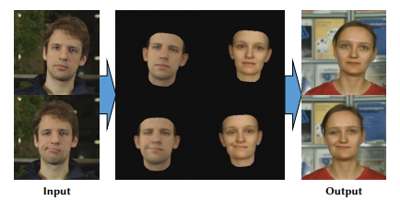

Given how deep learning is cropping everywhere it was only a matter of time before it would be applied to facial reenactment. This will have a big impact in animation and computer graphics. Researchers from the Max Planck Institute for Informatics, the Technical University of Munich, the University of Bath, Stanford University and Technicolor, have developed the first deep learning based system that can transfer the full 3D head position, facial expression and eye gaze from a source actor to a target actor. Their work builds on the Face2Face project we reported on in 2016 that was the first to put words into another person's mouth and an expression onto their face. As the team explains in a research paper prepared for SIGGRAPH 2018, to be held in Vancouver in August: “Synthesizing and editing video portraits, i.e. videos framed to show a person’s head and upper body, is an important problem in computer graphics, with applications in video editing and movie post-production, visual effects, visual dubbing, virtual reality, and telepresence, among others." Their new approach is based on a novel rendering-to-video translation network that converts a sequence of simple computer graphics renderings into photo-realistic and temporally-coherent video. It is the first to transfer head pose and orientation, face expression, and eye gaze from a source actor to a target actor. This mapping is learned based on a novel space-time conditioning volume formulation. Using NVIDIA TITAN Xp GPUs the team trained their generative neural network for ten hours on public domain clips and the results can be seen in this video:

The researchers conclude: We have shown through experiments and a user study that our method outperforms prior work in quality and expands over their possibilities. It thus opens up a new level of capabilities in many applications, like video reenactment for virtual reality and telepresence, interactive video editing, and visual dubbing. We see our approach as a step towards highly realistic synthesis of full-frame video content under control of meaningful parameters. We hope that it will inspire future research in this very challenging field. More InformationH. Kim, P. Garrido , A. Tewari, W. Xu, J. Thies, M. Nießner, P. Pérez, C. Richardt, Michael Zollhöfer, C. Theobalt, Deep Video Portraits, ACM Transactions on Graphics (SIGGRAPH 2018) Related ArticlesCreate Your Favourite Actor From Nothing But Photos Watching Paint Dry - GPU Paint Brush Better 3D Meshes Using The Nash Embedding Theorem 3-Sweep - 3D Models From Photos Time-Lapse Videos From Internet Photos

To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on Twitter, Facebook or Linkedin.

Comments

or email your comment to: comments@i-programmer.info |

|||

| Last Updated ( Sunday, 03 June 2018 ) |