Breakthrough! Faster Matrix Multiply

Written by Mike James

Friday, 09 December 2011


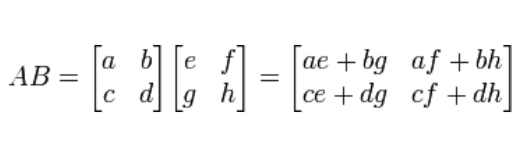

Matrix multiplication is a fundamental algorithm. It is used in many mathematical procedures, and finding a way to do it faster saves hours of computation and reduces the need for supercomputers. The standard algorithm for multiplying two matrices is remarkably simple, but it involves three nested for loops, so multiplying two n x n matrices takes of the order of n³ multiplications.
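The three nested loops of the standard method can be written out as a minimal Python sketch. The function name `matmul_naive` is illustrative, and the matrices are assumed to be plain nested lists. The innermost statement runs once for every combination of i, j and k, which is where the n³ count comes from.

```python
def matmul_naive(a, b):
    """Multiply an n x m matrix a by an m x p matrix b with the standard
    triple-loop algorithm: n * m * p scalar multiplications, i.e. n**3
    for two square n x n matrices."""
    n, m, p = len(a), len(b), len(b[0])
    if any(len(row) != m for row in a):
        raise ValueError("inner dimensions do not match")
    c = [[0] * p for _ in range(n)]
    for i in range(n):          # each row of a
        for j in range(p):      # each column of b
            total = 0
            for k in range(m):  # dot product of row i of a and column j of b
                total += a[i][k] * b[k][j]
            c[i][j] = total
    return c
```

For two 100 x 100 matrices the innermost line executes 100³ = 1,000,000 times.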

The algorithm is so simple that you might think there is no scope for a general reduction in this amount of work; it clearly needs three loops of order n. However, it has long been conjectured that matrix multiplication might be possible in very nearly quadratic time, that is, in time proportional to n^ω with ω close to 2.

The first algorithm to get ω below 3 was invented in 1969 by Volker Strassen. He found a way to multiply 2x2 matrices using just 7 rather than 8 multiplications; the trade-off was that you had to do more additions. Matrices of any size can be treated as 2x2 matrices whose entries are sub-matrix blocks, and applying the same trick recursively to the block products gives a general algorithm that takes O(n^ω) time with ω = log₂7 ≈ 2.807. This algorithm is less numerically stable than the standard method, but it is used in some number-crunching packages to speed things up for large matrices.

The next breakthrough came in 1990, when Coppersmith and Winograd found an algorithm that reduced ω to 2.376. And this is where the story comes to a halt, as there have been no improvements in the 20-plus years since.

Now Virginia Vassilevska Williams at UC Berkeley and Stanford University has found an algorithm that reduces ω to 2.373. At 0.003 that isn't much of a reduction, but it proves that even after 20 years without progress the bound can be pushed down. The principle employed was to apply the Coppersmith-Winograd method more than twice. This had been thought of before, but applying the method three times doesn't produce any improvement; Williams went as far as applying it an amazing eight times to get her result.

The idea was apparently first presented as part of a PhD thesis by Andrew Stothers, but it went unnoticed. It wasn't until Virginia Vassilevska Williams provided a general methodology and rediscovered the idea that its importance became clear. So as 2011 ends we can claim that the Coppersmith-Winograd barrier has been broken. How much lower can ω go?
Who knows? The bad news is that, for any matrix of practical size, the new algorithm is so complex that it simply isn't worth using. This really does seem to have become a purely theoretical quest.
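Strassen's 1969 trick, the starting point of this whole story, is easiest to see in code. Below is a minimal Python sketch, not taken from the article, which assumes square matrices whose size is a power of two and uses plain nested lists; the names `strassen`, `strassen_mults` and the block helpers are illustrative. Each call splits its inputs into four half-size blocks and forms the standard seven products m1 to m7, so the number of scalar multiplications obeys T(n) = 7T(n/2) with T(1) = 1. For n = 2^k that gives 7^k = n^(log₂7).

```python
def add(x, y):
    """Element-wise sum of two equal-sized matrices."""
    return [[p + q for p, q in zip(rx, ry)] for rx, ry in zip(x, y)]


def sub(x, y):
    """Element-wise difference x - y of two equal-sized matrices."""
    return [[p - q for p, q in zip(rx, ry)] for rx, ry in zip(x, y)]


def split(m):
    """Split a 2h x 2h matrix into its four h x h blocks."""
    h = len(m) // 2
    return ([row[:h] for row in m[:h]], [row[h:] for row in m[:h]],
            [row[:h] for row in m[h:]], [row[h:] for row in m[h:]])


def join(c11, c12, c21, c22):
    """Reassemble four h x h blocks into one 2h x 2h matrix."""
    top = [r1 + r2 for r1, r2 in zip(c11, c12)]
    bottom = [r1 + r2 for r1, r2 in zip(c21, c22)]
    return top + bottom


def strassen(a, b):
    """Multiply two n x n matrices (n a power of two) with Strassen's scheme."""
    n = len(a)
    if n == 0 or n & (n - 1) or len(b) != n:
        raise ValueError("this sketch needs two n x n matrices, n a power of two")
    if n == 1:
        return [[a[0][0] * b[0][0]]]  # the only place a scalar multiply happens
    a11, a12, a21, a22 = split(a)
    b11, b12, b21, b22 = split(b)
    # Seven recursive block products instead of the obvious eight.
    m1 = strassen(add(a11, a22), add(b11, b22))
    m2 = strassen(add(a21, a22), b11)
    m3 = strassen(a11, sub(b12, b22))
    m4 = strassen(a22, sub(b21, b11))
    m5 = strassen(add(a11, a12), b22)
    m6 = strassen(sub(a21, a11), add(b11, b12))
    m7 = strassen(sub(a12, a22), add(b21, b22))
    # Recombine the products using only additions and subtractions.
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)
    return join(c11, c12, c21, c22)


def strassen_mults(n):
    """Scalar multiplications strassen() performs on n x n inputs:
    T(n) = 7 * T(n / 2), T(1) = 1."""
    return 1 if n == 1 else 7 * strassen_mults(n // 2)
```

For an 8 x 8 product this uses 7³ = 343 scalar multiplications against 8³ = 512 for the triple loop, at the cost of many extra additions and list copies. That overhead is why practical implementations usually recurse only down to some cutoff block size and then switch to the standard algorithm.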

More information: Breaking the Coppersmith-Winograd barrier

To be informed about new articles on I Programmer, subscribe to the RSS feed, follow us on Google+, Twitter or Facebook or sign up for our weekly newsletter.


Last Updated (Saturday, 10 December 2011)