Neural Networks for Storytelling

Written by Nikos Vaggalis

Sunday, 12 June 2016

A paper authored by a large team of Microsoft researchers, past and present, to be presented this week at the 15th Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL 2016), introduces the Microsoft Sequential Image Narrative Dataset (SIND) and shows how it can be used for visual storytelling.

Visual storytelling is a standard preschool activity found in any curriculum. Children are presented with a number of small paper frames, each containing a piece of a story, and have to put them in the correct order, from start to finish, to reveal the hidden story: one with a beginning, a middle and an end.

This is not rudimentary, however. The ability to narrate is a multidisciplinary skill acquired at a very early stage, and for toddlers to be able to describe an event they first have to process a number of aspects, such as context, chronological ordering, and the establishment of characters and location. As the years pass and experience is acquired, our storytelling capabilities evolve so that we can draw on greater, richer and more versatile descriptions, to the point that storytelling has been described as "perhaps the most powerful way that human beings organize experience".

Given our current concern to find ways for artificial intelligence to replicate human abilities, and on many occasions even surpass them, visual storytelling is another human activity coming under scrutiny. Can a neural network be made capable of making sense of a sequence of pictures and stringing them together to tell a meaningful story?

Classifying and categorizing images, as well as recognizing the physical objects they contain, is a task that neural networks already handle well, and one that has been employed in many practical applications such as LaMem, explored in Are your pictures memorable?
We have also already met the idea of a computer describing what it sees in a photo with Caption.bot, another interactive app that you can try out online, also from the Microsoft Cognition Group. This is the group responsible for the Seeing AI app, unveiled at Build 2016 by Satya Nadella, which helps the visually impaired to know what is going on around them. Part of what Seeing AI does is provide real-time captions of what is happening in the environment.

Visual storytelling goes another step towards what humans do by understanding context. The problem that classification alone has been unable to address is establishing context: going from the concrete and isolated (like a tree) to the abstract and general (like a forest), to get the bigger picture.

Now Microsoft Research thinks that it has the answer to the million dollar question with SIND, the Microsoft Sequential Image Narrative Dataset, which aims to:

"move artificial intelligence from basic understandings of typical visual scenes towards more and more human-like understanding of grounded event structure and subjective expression"

The dataset was built from albums of publicly available images on Flickr, which were processed in a two-stage crowd-sourcing workflow using Amazon's Mechanical Turk. In the first stage, a crowd worker was asked to select a subset of photos from a given album to form a photo sequence and write a story about it. In the second stage, another worker was asked to re-tell the story, writing a story based on one of the photo sequences generated by workers in the first stage.

The dataset was then processed in three stages, yielding three groups of annotations:

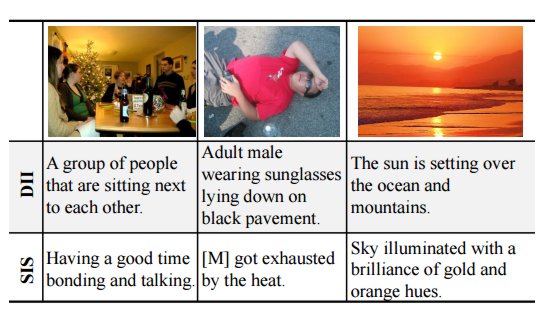

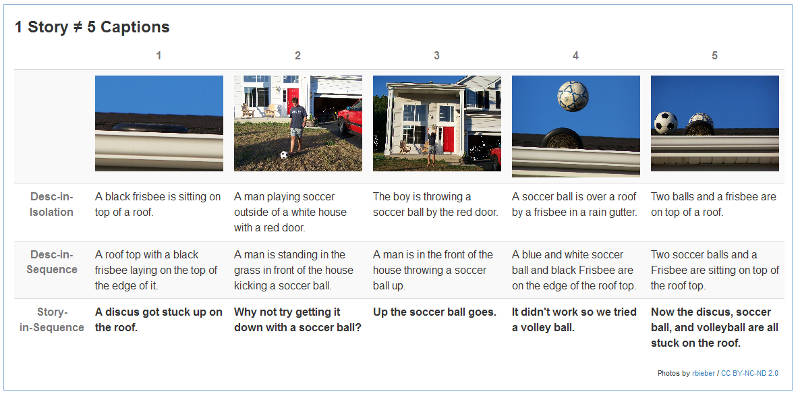

incrementally moving from just considering the content of a single image (DII), through a set of images with a temporal context (DIS), to stories which use richer narrative language rather than simple descriptions of a scene (SIS).

The captioned dataset was fed to the neural network, where the actual vision-to-language processing took place. The results, in other words the quality of the stories that the AI generated, were checked by humans, as human judgment is still the most reliable measure given the complexity of the storytelling task.

Although the research is still in its early stages, with the AI's intelligence resembling that of a little child, the first results look promising and establish several strong baselines. Samples of the work can be found on the project's web page, where we find an example of striking accuracy: that of trying to knock a discus off the roof of a house using a soccer ball. This is what the AI came up with:

"A discus got stuck up on the roof. Why not try getting it down with a soccer ball? Up the soccer ball goes. It didn't work so we tried a volley ball. Now the discus, soccer ball, and volleyball are all stuck on the roof."
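To make the tiered annotation idea more concrete, here is a minimal Python sketch of how one photo sequence and its annotations might be held in memory. It is only an illustration: the class `PhotoSequence`, its fields, the album and photo identifiers, and the one-text-per-photo rule are all assumptions made for this example, not the actual SIND file format or anything taken from the paper's code. The only real data in it is the discus story quoted above.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Tier labels as used in the article; everything else here is hypothetical.
TIERS = ("DII", "DIS", "SIS")


@dataclass
class PhotoSequence:
    """One ordered set of photos plus its annotations (illustrative only)."""
    album_id: str
    photo_ids: List[str]
    # Maps a tier label to one text per photo, in sequence order.
    annotations: Dict[str, List[str]] = field(default_factory=dict)

    def annotate(self, tier: str, texts: List[str]) -> None:
        """Attach one text per photo for the given tier."""
        if tier not in TIERS:
            raise ValueError(f"unknown tier: {tier}")
        if len(texts) != len(self.photo_ids):
            raise ValueError("need exactly one text per photo")
        self.annotations[tier] = list(texts)

    def story(self) -> str:
        """Join the story-tier (SIS) sentences into a single narrative."""
        return " ".join(self.annotations.get("SIS", []))


# Placeholder identifiers; the sentences are the story quoted in the article.
seq = PhotoSequence(album_id="album-0", photo_ids=["p1", "p2", "p3", "p4", "p5"])
seq.annotate("SIS", [
    "A discus got stuck up on the roof.",
    "Why not try getting it down with a soccer ball?",
    "Up the soccer ball goes.",
    "It didn't work so we tried a volley ball.",
    "Now the discus, soccer ball, and volleyball are all stuck on the roof.",
])
print(seq.story())
```

Keeping every tier keyed to the same ordered list of photos is one simple way to let the same images carry both plain descriptions and a story, which is the contrast the dataset is designed to expose.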

Unfortunately, unlike LaMem or Caption.bot, whose websites allow you to upload pictures and get live feedback, the SIND project website is not yet equipped with that capability. It's a shame, because I'd like to see how it performs against Flickr albums such as Humpty Dumpty and Homeless. Could AI capture the context of homelessness? Would it understand that the chair is the person's only possession? Would it understand that the homeless man first paid for his food, then ate it and then tried to catch a nap?

Even if there are limitations to AI's visual storytelling capabilities, there are plenty of practical applications of this technology. The idea of helping those with visual impairment has already been demonstrated by the Seeing AI project, which uses the Microsoft Vision and natural language APIs, just two of the APIs (formerly called Project Oxford) that are available to use on GitHub. There is obvious scope for its use in social media, and it could be tweaked to play a role in interpreting Big Data.

It is also possible to imagine it being applied in policing and surveillance, where a series of pictures taken from various surveillance cameras is fed into the system, which orders and combines them to present the crime, or the activities of the person being monitored, in natural language for a court or an investigation. But perhaps that is too futuristic a vision.
