OpenAI Gym Gives Reinforcement Learning A Work Out

Written by Mike James

Friday, 29 April 2016

When OpenAI, an open source AI initiative backed by Elon Musk, Sam Altman and Ilya Sutskever, was announced in December 2015, I doubt anyone expected it to produce anything so quickly, and certainly not something connected with reinforcement learning. OpenAI Gym is what it sounds like - an exercise facility for reinforcement learning.

Since the success of DeepMind's Deep Q learning at playing games, and Go in particular, the subject of reinforcement learning (RL) has gone from an academic backwater to front line AI. The big problem is that reinforcement learning is a difficult technique to characterise. Put simply, an RL system learns not by being told how close it is to the desired result, but by receiving rewards based on its behaviour. Of course, this is largely how we learn, and if it can be made to work efficiently it promises us not just effective AI but new knowledge. For example, AlphaGo taught itself to play Go and in the process discovered approaches to Go that humans had ignored. OpenAI claims that two things are holding RL back:

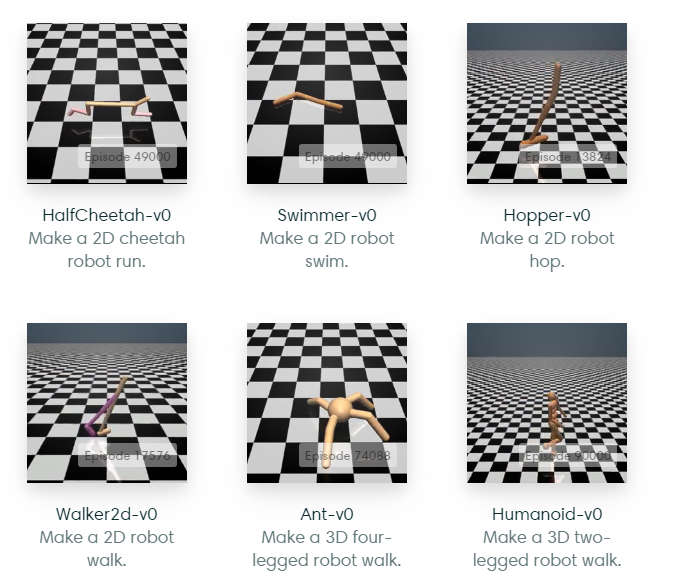

The motivation behind OpenAI Gym is to provide a set of environments that different RL programs can be tested in. These are:

At the moment you can connect your RL system to the gym using Python. Of course, it is up to you to map the RL system onto the environment - as the documentation says: "We provide the environment; you provide the algorithm. You can write your agent using your existing numerical computation library, such as TensorFlow or Theano." The idea is to collect and curate a set of results that indicate how well the different approaches generalize. It is good to see that an open source initiative is doing something other than simply reproducing what is being done in the closed software world. It would be very easy for OpenAI to simply build its own TensorFlow or an alternative, but OpenAI Gym is novel and needed.
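To make the "we provide the environment; you provide the algorithm" split concrete, here is a minimal sketch in plain Python. It does not import Gym. The `CoinGuessEnv` class, the `AveragingAgent` class and the `run` helper are hypothetical names invented for illustration. The assumption is that an environment exposes `reset()`, returning an observation, and `step(action)`, returning an observation, a reward, a done flag and an info dictionary, which is the convention Gym environments followed at launch. The agent is a simple epsilon-greedy learner that is never told the right answer and only sees rewards.

```python
import random


class CoinGuessEnv:
    """Hypothetical toy environment, not part of OpenAI Gym.

    It mimics the reset()/step() convention: step() returns
    (observation, reward, done, info).
    """

    def __init__(self, episode_length=10, seed=0):
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.steps = 0

    def reset(self):
        self.steps = 0
        return 0  # there is only one (dummy) observation

    def step(self, action):
        # Action 1 is rewarded 80% of the time, action 0 only 20%.
        p_reward = 0.8 if action == 1 else 0.2
        reward = 1.0 if self.rng.random() < p_reward else 0.0
        self.steps += 1
        done = self.steps >= self.episode_length
        return 0, reward, done, {}


class AveragingAgent:
    """Hypothetical epsilon-greedy agent tracking mean reward per action."""

    def __init__(self, n_actions=2, epsilon=0.1, seed=1):
        self.n_actions = n_actions
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = [0] * n_actions
        self.values = [0.0] * n_actions

    def act(self, observation):
        # Explore occasionally, otherwise pick the best-looking action.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_actions)
        return max(range(self.n_actions), key=lambda a: self.values[a])

    def learn(self, action, reward):
        # Incremental update of the running mean reward for this action.
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


def run(env, agent, episodes=200):
    """Run the agent-environment loop and return the total reward."""
    total = 0.0
    for _ in range(episodes):
        observation = env.reset()
        done = False
        while not done:
            action = agent.act(observation)
            observation, reward, done, info = env.step(action)
            agent.learn(action, reward)
            total += reward
    return total


if __name__ == "__main__":
    env = CoinGuessEnv()
    agent = AveragingAgent()
    print("total reward:", run(env, agent))
    print("estimated action values:", agent.values)
```

With Gym installed, the toy class would be replaced by an environment obtained from `gym.make`, and the loop in `run` would stay essentially the same. That is the point of a shared interface: the same agent code can be tried against many environments.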

Related Articles

AI Goes Open Source To The Tune Of $1 Billion

GNU Gneural Network - Do We Need Another Open Source DNN?

Google's DeepMind Demis Hassabis Gives The Strachey Lecture

AlphaGo Beats Lee Sedol Final Score 4-1

Why AlphaGo Changes Everything

Google's DeepMind Learns To Play Arcade Games

Microsoft Wins ImageNet Using Extremely Deep Neural Networks

The Flaw In Every Neural Network Just Got A Little Worse


Last Updated ( Wednesday, 12 July 2023 )