Google's AI Beats Human Professional Player At Go

Written by Mike James

Wednesday, 27 January 2016

Go has long been a troublesome game for AI. It was the game in which humans clung on to supremacy - until now. A neural-network-based system has finally beaten a professional human player.

The new system achieved a 99.8% winning rate against other Go programs and defeated the (human) European Go champion by five games to zero. Of course, this doesn't prove that it is the best Go player in the world, but it is clear that something big has just happened. Computers can now beat us at Go!

The system, called AlphaGo and designed by Google's DeepMind team, is novel in that it uses two distinct neural networks in something like a traditional reinforcement learning "actor-critic" arrangement. The first, the policy network, picks possible moves and the second, the value network, evaluates the resulting positions in terms of how likely they are to lead to a win. This approach allowed AlphaGo to learn from 30 million positions taken from human games of Go and then go on to refine its performance by playing millions of games against itself.
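The division of labour between the two networks can be illustrated with a very small sketch. This is not AlphaGo's code: the game is a Nim-like toy (take one to three stones, whoever takes the last stone wins), and `toy_policy`, `toy_value` and `choose_move` are hypothetical hand-written stand-ins for the trained networks and for the search that, in the real system, combines them.

```python
from typing import Callable, Dict, List

State = int  # toy stand-in for a board: number of stones left in the pile
Move = int   # number of stones to take (1 to 3)


def legal_moves(state: State) -> List[Move]:
    """Moves available in the toy game: take 1, 2 or 3 stones."""
    return [m for m in (1, 2, 3) if m <= state]


def apply_move(state: State, move: Move) -> State:
    return state - move


def toy_policy(state: State) -> Dict[Move, float]:
    """Hypothetical stand-in for a policy network.

    Returns a prior probability for each legal move. Here the prior simply
    favours taking fewer stones; a real policy network would be trained.
    """
    weights = {m: 1.0 / m for m in legal_moves(state)}
    total = sum(weights.values())
    return {m: w / total for m, w in weights.items()}


def toy_value(state: State) -> float:
    """Hypothetical stand-in for a value network.

    Scores a position from the point of view of the player who has just
    moved: +1.0 if the opponent is left with a multiple of four stones
    (a lost position in this toy game), otherwise -1.0.
    """
    return 1.0 if state % 4 == 0 else -1.0


def choose_move(
    state: State,
    policy: Callable[[State], Dict[Move, float]],
    value: Callable[[State], float],
    top_k: int = 2,
) -> Move:
    """Let the policy propose candidates, then let the value function pick.

    Assumes the state has at least one legal move.
    """
    priors = policy(state)
    # Keep only the top_k moves the policy considers most promising.
    candidates = sorted(priors, key=lambda m: priors[m], reverse=True)[:top_k]
    # Evaluate the position each candidate leads to and keep the best one.
    return max(candidates, key=lambda m: value(apply_move(state, m)))


if __name__ == "__main__":
    print(choose_move(5, toy_policy, toy_value, top_k=3))
```

With a small `top_k`, the quality of the result depends on the policy proposing the right candidates in the first place, which is why the first network matters as much as the second.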

You can see how the game and AlphaGo work in the following video:

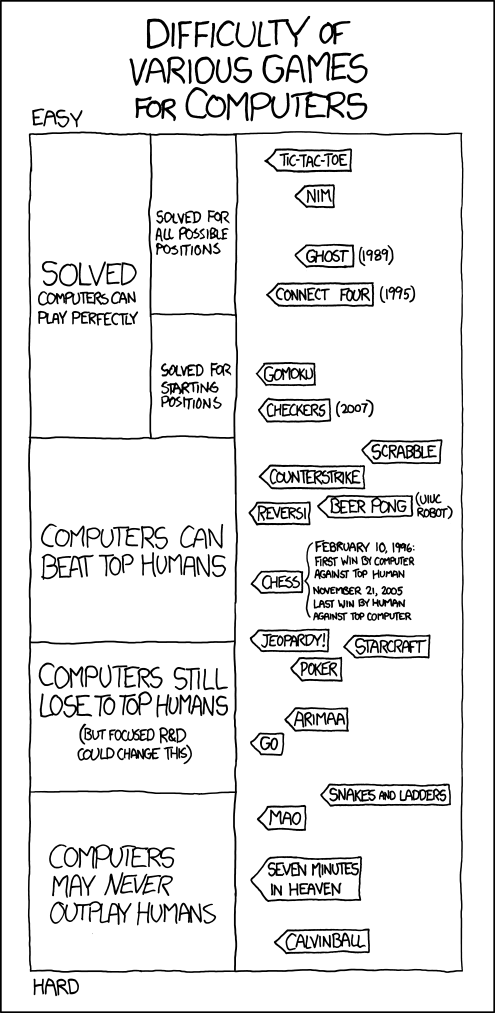

This is a more "human" approach to playing games. The standard AI approach to playing games is based on deep search to evaluate positions. Essentially, chess-playing programs win because they have the computational power to play the game into the future and evaluate the next move in terms of what will subsequently happen. AlphaGo relies more on intelligently selecting a small number of candidate moves, which it then evaluates. This seems to be what is needed to enable a machine to win at Go. One thing that is certain is that the well-known and previously "accurate" xkcd cartoon will need to be updated:

More cartoon fun at xkcd, a webcomic of romance, sarcasm, math, and language
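The contrast between deep search and selective move choice described above can be made concrete with a toy experiment. The sketch below is an illustration only, on a Nim-like game (take one to three stones, whoever takes the last stone wins); `search`, `plain_ranking` and `informed_ranking` are hypothetical names, and the plain negamax search used here is a stand-in, not the tree search AlphaGo itself uses.

```python
from typing import Callable, List


def legal_moves(stones: int) -> List[int]:
    """Moves available in the toy game: take 1, 2 or 3 stones."""
    return [m for m in (1, 2, 3) if m <= stones]


def plain_ranking(stones: int) -> List[int]:
    """No move-selection knowledge: consider moves in their natural order."""
    return legal_moves(stones)


def informed_ranking(stones: int) -> List[int]:
    """Hypothetical stand-in for a learned move selector.

    Puts the move that leaves a multiple of four stones first, if one exists.
    """
    preferred = stones % 4
    # sorted() is stable, so the preferred move (key False) comes first and
    # the remaining moves keep their original order.
    return sorted(legal_moves(stones), key=lambda m: m != preferred)


def search(
    stones: int,
    rank_moves: Callable[[int], List[int]],
    top_k: int,
    counter: List[int],
) -> int:
    """Negamax over the top_k ranked moves at each position.

    Returns +1 if the player to move wins among the lines examined, -1
    otherwise. counter[0] accumulates the number of positions visited.
    """
    counter[0] += 1
    if stones == 0:
        return -1  # the previous player took the last stone and has won
    moves = rank_moves(stones)[:top_k]
    return max(-search(stones - m, rank_moves, top_k, counter) for m in moves)


if __name__ == "__main__":
    full_count, selective_count = [0], [0]
    full = search(10, plain_ranking, 3, full_count)
    selective = search(10, informed_ranking, 1, selective_count)
    print("full search:", full, "positions visited:", full_count[0])
    print("selective search:", selective, "positions visited:", selective_count[0])
```

In this toy the selective search reaches the same verdict while visiting far fewer positions, but only because the ranking function happens to be good. With a poor ranking and a small `top_k`, the winning move could be discarded before it is ever evaluated.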

There are plans to evaluate AlphaGo by playing against the world's best players, so watch for more news soon. What is interesting is that Google's DeepMind seems to have found a way of combining ideas from reinforcement learning and neural networks.

Picture Credit: Goban1

More Information

Mastering the game of Go with deep neural networks and tree search

Related Articles

Google's DeepMind Learns To Play Arcade Games

Google Buys Unproven AI Company

Deep Learning Researchers To Work For Google

Google's Neural Networks See Even Better


Last Updated ( Wednesday, 27 January 2016 )