Automatic Colorizing Photos With A Neural Net

Written by David Conrad

Saturday, 09 January 2016

The range of things that neural nets can do is well known to be amazing, but I bet you didn't think of this particular use. It is not only clever, it is of potential practical value. The world is full of old black-and-white photos and videos that the general public won't spend time looking at because they lack color. Hence there is a lot of interest in colorizing pictures and videos. In most cases this is done by hand with the help of whatever digital painting technology is available. There is even a Reddit group devoted to the topic, which shows some impressive results.

Could a neural network be trained to do the job? At first thought it seems a bit unlikely, because it is a very sophisticated task. The network would have to recognize an object, a face say, find its boundaries and assign it the color of a typical face. However, neural networks are good at recognizing things, so why not give it a try? This is exactly what Ryan Dahl has done, with the help of Google's TensorFlow. As an aside, it seems that the release of TensorFlow really has stimulated more general use of, and experimentation with, neural networks.

The first piece of good news is that training data is trivial to get hold of. Just take any color photo and desaturate it to get a greyscale version. You can then train the network with the greyscale version as the input and the original as the target.

The actual neural network architecture is fully described in a detailed blog post, but in outline it starts with a trained image classifier and then learns the mapping from greyscale images to color images. This is a suggested new model, a "residual decoder", which is similar to, but more than, an autoencoder, which would map greyscale images to greyscale images.

So does it work? The answer has to be "sort of". The big problem is that objects and colors aren't always even slightly correlated. Imagine that the network is presented with lots of images of hats: what color is a hat? Obviously hats come in almost any color you care to think of, and so the network will converge on a sort of sludge brown, which is a mix of all the possibilities. If the correlation between object and color is appreciable, then the network will learn things like "most cars are black". This weak color correlation is the reason the network tends to produce sepia-toned images, but the results are surprisingly good.
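Generating training data this way needs nothing more than a desaturation step. The sketch below is illustrative only: it assumes images are held as rows of `(r, g, b)` tuples in the range 0 to 255, and it uses the ITU-R BT.601 luma weights to compute grey levels, which may not be how the original project converts its images. The helper names `to_grey`, `desaturate` and `make_training_pairs` are hypothetical.

```python
# Sketch: building (greyscale input, color target) training pairs.
# Assumptions: images are rows of (r, g, b) tuples with values 0..255, and
# grey levels use the ITU-R BT.601 luma weights (illustrative choice only).

def to_grey(pixel):
    """Return the BT.601 luma of one (r, g, b) pixel, rounded to an int."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def desaturate(image):
    """Convert a color image (rows of RGB tuples) to greyscale (rows of ints)."""
    return [[to_grey(pixel) for pixel in row] for row in image]


def make_training_pairs(color_images):
    """Pair each desaturated image (network input) with its original (target)."""
    return [(desaturate(image), image) for image in color_images]


# A tiny 2x2 "photo": red, green / blue, white.
photo = [[(255, 0, 0), (0, 255, 0)],
         [(0, 0, 255), (255, 255, 255)]]
pairs = make_training_pairs([photo])
grey, target = pairs[0]
print(grey)  # [[76, 150], [29, 255]]
```

In a real pipeline an image library would do the conversion; the point is simply that every color photo yields a (greyscale input, color target) pair for free.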
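The sludge brown is what you would expect if the network is trained to minimize the squared error between predicted and true colors; the article doesn't say which loss is used, so treat that as an assumption. The single prediction that minimizes mean squared error over a set of targets is their mean, and the mean of many different hat colors is a muddy brownish color. This sketch, using made-up hat and car colors and hypothetical helper names, checks that numerically and shows how a strong object-color correlation drags the mean toward the dominant color. For simplicity it ignores the fact that differently colored hats also have different grey levels, which a real network can exploit.

```python
# Sketch: why a squared-error loss pulls ambiguous objects toward an average color.
# Assumption: the loss is squared error in RGB; the article does not say.
# All color values below are made up for illustration.

def mean_color(colors):
    """Component-wise mean of a list of (r, g, b) tuples."""
    n = len(colors)
    return tuple(sum(c[i] for c in colors) / n for i in range(3))


def mse(prediction, colors):
    """Mean squared error of one predicted (r, g, b) against every target color."""
    total = 0.0
    for c in colors:
        total += sum((p - t) ** 2 for p, t in zip(prediction, c))
    return total / len(colors)


# Hats: no correlation between object and color.
hats = [(200, 30, 30), (30, 30, 200), (30, 160, 60), (220, 200, 40)]
best_hat = mean_color(hats)
print(best_hat)  # (120.0, 105.0, 82.5), a muddy greyish brown

# The mean beats predicting any single "real" hat color.
assert all(mse(best_hat, hats) <= mse(h, hats) for h in hats)

# Cars: a strong correlation ("most cars are black") drags the mean toward black.
cars = [(20, 20, 20)] * 8 + [(200, 30, 30), (240, 240, 240)]
print(mean_color(cars))  # (60.0, 43.0, 43.0)
```

When the targets are spread out, the loss-minimizing answer is a blend that matches none of them, which fits the washed-out, sepia look described above.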

The first photo is the input image, the middle one is the output and the final photo is the correct color image. These are from the validation set, so the neural network wasn't trained on them and has never seen them before. Notice that the network does very well, and where it goes wrong you can see why. For example, the network can't know the color of the lorry, so why should it know the color of the green band around the lower edge of the cab? This is all the more impressive when you know that it was achieved after just one day of training. There are lots more examples in the blog post for you to examine, including some where things didn't work out as well.

So how does it do against human colorization, or to be specific, against the Reddit group?

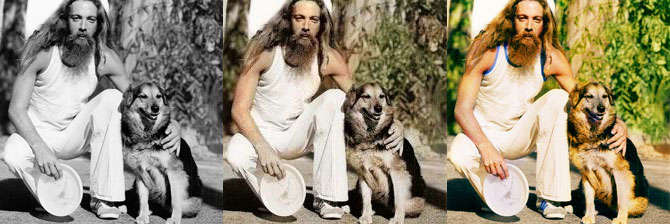

This time the image on the far right is the hand-colored version. You can see that the network's output isn't really in the same league, but for such a simple system it works better than you might expect. Notice that the sky isn't completely colored in the first example and the hands in the second have been missed. This sort of problem could be fixed with more training. There are other examples where things don't go as well, but it is still impressive. It has some interesting quirks: it tends to color black animals brown, and all grass is green.

This is just the start of the project, and there are lots of ideas on how to make it better. In particular, there is the extension to video. You could simply colorize each frame using the network, but clearly there is a relationship between frames that can be made use of, as coloring should be coherent across frames. However, take a look at how good the results are if you use the naive method of coloring individual frames in isolation:

Not perfect, but clearly there is a lot of potential in this technique. You can find more information on the blog, and you can download the model in TensorFlow format.
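The article doesn't say how frame-to-frame coherence would be exploited in video. One very simple possibility, offered here only as a hypothetical sketch and not as the project's method, is to blend each frame's predicted colors with the smoothed colors of the previous frame using an exponential moving average. It assumes each frame's prediction is a flat list of `(r, g, b)` values, one per pixel, with all frames the same size; `smooth_frames` and `alpha` are illustrative names.

```python
# Hypothetical sketch: temporal smoothing of per-frame color predictions.
# Not the project's method; assumes frames are equal-length lists of (r, g, b).

def smooth_frames(frame_colors, alpha=0.5):
    """Exponential moving average of per-pixel colors across frames.

    alpha is the weight given to the current frame (0 < alpha <= 1);
    alpha = 1 reproduces the naive frame-by-frame result.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    previous = None
    for frame in frame_colors:
        if previous is None:
            # The first frame has no history, so it is kept as-is.
            current = [tuple(pixel) for pixel in frame]
        else:
            # Blend each pixel with the same pixel in the previous smoothed frame.
            current = [
                tuple(alpha * c + (1 - alpha) * p for c, p in zip(pixel, prev))
                for pixel, prev in zip(frame, previous)
            ]
        smoothed.append(current)
        previous = current
    return smoothed


# A single pixel whose predicted color flickers between red and blue.
flicker = [[(100, 0, 0)], [(0, 0, 100)], [(100, 0, 0)]]
print(smooth_frames(flicker))
# [[(100, 0, 0)], [(50.0, 0.0, 50.0)], [(75.0, 0.0, 25.0)]]
```

The obvious weakness is that this ignores motion: when an object moves, its colors get blended with whatever occupied those pixels in the previous frame. A more serious approach would track motion between frames, for example with optical flow, before blending.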

Last Updated: Sunday, 10 January 2016