Describe It And AI Will Draw It For You

Written by David Conrad

Sunday, 21 January 2018

Neural networks keep impressing us by doing tasks better than we thought possible. The latest in a trio of networks from Microsoft can draw what you describe. Microsoft has been building neural networks to see what they can do. The first automatically writes captions for pictures and the second answers questions about pictures, both fairly remarkable. The third completes the circle by drawing a picture of something you describe, and this takes us somewhere new. It also emphasises that, while some people are starting to downplay neural networks and deep learning in general, there is still a lot to get out of the method before we move on. In fact, it might even be showing us what we could move on to, in that it combines more than one neural network in a more sophisticated design than a simple deep network would lead you to expect.

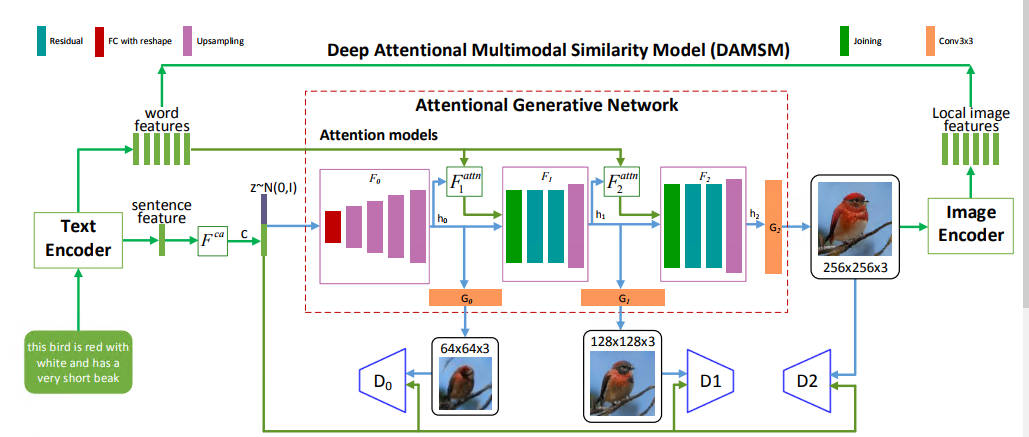

The design is called AttnGAN, and it is trained with the help of a Deep Attentional Multimodal Similarity Model, or DAMSM for short. Three neural networks generate images at increasing resolution. The whole system was trained on images with captions. The learning was implemented as a Generative Adversarial Network, in which a generator produces an image and tries to pass it off to another network, the discriminator, as an image that corresponds to the caption. This is fairly standard, but in this case each stage pays attention to different parts of the description and slowly improves the image until it is good enough to fool a human, some of the time at least.
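To make the adversarial part concrete, here is a minimal sketch of the two standard GAN losses in plain Python. It is not the AttnGAN training code: the function names are invented for illustration, and `d_real` and `d_fake` are assumed to be the probabilities a discriminator assigns to a real and a generated (image, caption) pair.

```python
import math

def bce(prob, target):
    """Binary cross-entropy for one probability and a 0/1 target."""
    eps = 1e-12  # guards against log(0)
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))

def discriminator_loss(d_real, d_fake):
    # The discriminator wants real pairs scored near 1
    # and generated pairs scored near 0.
    return bce(d_real, 1) + bce(d_fake, 0)

def generator_loss(d_fake):
    # The generator wants its image to be scored as real.
    return bce(d_fake, 1)
```

The generator's loss falls as the discriminator is fooled more often, which is the "passing off" described above. The real system adds further terms, including the caption-matching one, that this sketch leaves out.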

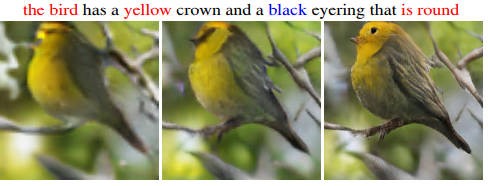

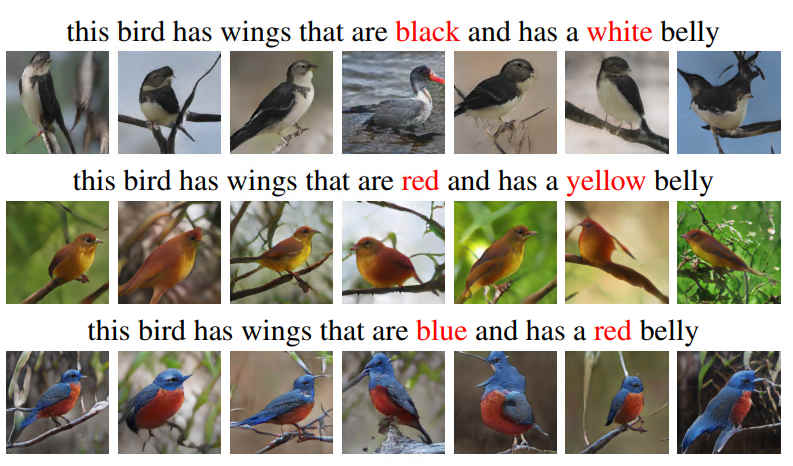
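The idea of paying attention to different parts of the description can be sketched in a few lines. The example below is a toy under stated assumptions, not the network's actual attention layer: the two-dimensional word vectors and the region vector are made up, and `attend` is a hypothetical helper that scores each caption word against one image region, turns the scores into weights with a softmax, and blends the word vectors accordingly.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend(region, word_vectors):
    """Return (weights, context) for one image region over the caption words."""
    weights = softmax([dot(region, w) for w in word_vectors])
    dim = len(word_vectors[0])
    # The context vector is the attention-weighted average of the word vectors.
    context = [sum(wt * w[i] for wt, w in zip(weights, word_vectors))
               for i in range(dim)]
    return weights, context

# Made-up embeddings for three caption words (assumption, for illustration only).
words = ["red", "bird", "branch"]
word_vectors = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
region = [2.0, 0.0]  # a region whose features line up with "red"

weights, context = attend(region, word_vectors)
focus = words[weights.index(max(weights))]
```

Here `focus` comes out as "red", because that word's vector has the largest dot product with the region. In a multi-stage generator, a context vector of this kind is what lets each region of the image draw on the words most relevant to it.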

Just being able to recreate a picture of a bird after being shown pictures of birds and captions isn't particularly impressive. You could mostly solve the problem by remembering the input examples without any analysis of the structure of the data. What makes neural networks and systems built from multiple neural networks interesting is how they generalize and behave on inputs they have never been presented with. In this case the networks do seem to be picking up on the meaning of the individual words in the sentences rather than matching complete sentences to complete images:

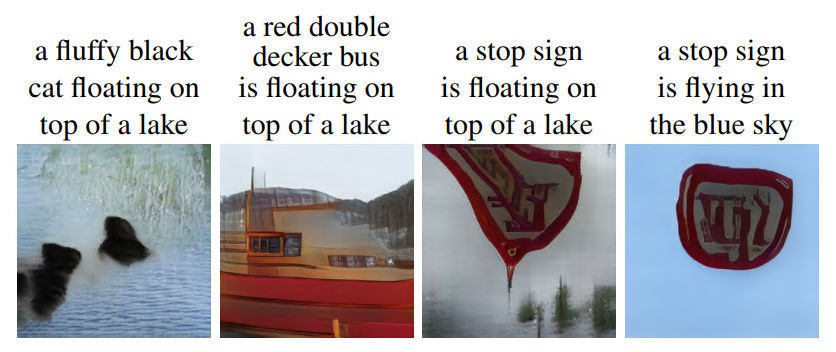
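The difference between memorizing whole captions and learning what individual words mean can be shown with a deliberately tiny example. Nothing here resembles a neural network: "images" are just dictionaries of attributes, the training pairs are invented, and `train_word_model` is a hypothetical helper that keeps, for each word, only the attributes shared by every training image whose caption uses it.

```python
def train_memorizer(pairs):
    """Store each full caption with its image; nothing is learned about words."""
    return dict(pairs)

def train_word_model(pairs):
    """For each word, keep the attributes common to all images captioned with it."""
    meaning = {}
    for caption, image in pairs:
        items = set(image.items())
        for word in caption.split():
            meaning[word] = meaning[word] & items if word in meaning else items
    return meaning

def draw(word_model, caption):
    """Compose an image from the attributes attached to each known word."""
    image = {}
    for word in caption.split():
        image.update(dict(word_model.get(word, set())))
    return image

# Invented training data (assumption, for illustration only).
pairs = [
    ("red bird", {"colour": "red", "object": "bird"}),
    ("blue bird", {"colour": "blue", "object": "bird"}),
    ("red bus", {"colour": "red", "object": "bus"}),
    ("yellow bus", {"colour": "yellow", "object": "bus"}),
    ("blue boat", {"colour": "blue", "object": "boat"}),
]

memorizer = train_memorizer(pairs)
word_model = train_word_model(pairs)

unseen = "blue bus"  # never appears in the training captions
memorized = memorizer.get(unseen)
composed = draw(word_model, unseen)
```

The memorizer has nothing to return for the unseen caption, while the word-level model assembles a blue bus from pieces it saw separately. That is the kind of behaviour the examples above suggest the network has picked up, although how it gets there is of course far less tidy.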

The network is also reasonably impressive when fed descriptions that are strange and very much not part of the training set:

You can see that the results sort of make sense, if not perfect sense. Occasionally the network gets it very wrong, which shows that it hasn't entirely managed to model what a natural image is:

I don't know if there is a practical application waiting for this particular network, but it could be evolved into something more capable, and then it could have real implications. Imagine being able to describe the artwork you want and have the network generate it for you. Applications? Lots.

More Information

AttnGAN: Fine-Grained Text to Image Generation with Attentional Generative Adversarial Networks, by Tao Xu, Pengchuan Zhang, Qiuyuan Huang, Han Zhang, Zhe Gan, Xiaolei Huang and Xiaodong He


Last Updated ( Sunday, 14 June 2020 )