Are Your Pictures Memorable?

Written by Nikos Vaggalis

Wednesday, 23 December 2015

Whenever you post a picture on social media, you are eager to know how well it will be received and how many tweets or likes it will attract. Now MemNet, an algorithm coming out of MIT's labs, may reveal whether your picture will be forgotten in a snap or remembered for a long time. This could in turn help you pick the picture that will stand out from the rest and be ideal for online consumption. Fun uses aside, this technology could have serious and widespread applications:

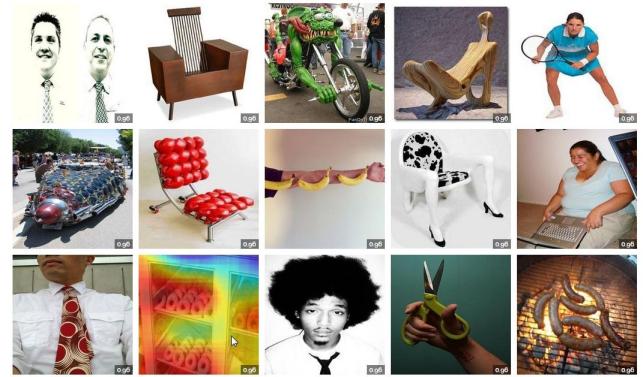

According to the official statement, MemNet is an algorithm that can: "objectively measure human memory, allowing us to build LaMem, the largest annotated image memorability dataset to date (containing 60,000 images from diverse sources). Using Convolutional Neural Networks (CNNs), we show that fine-tuned deep features outperform all other features by a large margin, reaching a rank correlation of 0.64, near human consistency (0.68). Analysis of the responses of the high-level CNN layers shows which objects and regions are positively, and negatively, correlated with memorability, allowing us to create memorability maps for each image and provide a concrete method to perform image memorability manipulation."
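The 0.64 and 0.68 figures are rank correlations: they measure how well the *ordering* of images by predicted memorability agrees with the ordering produced by human scores, rather than how close the individual numbers are. Assuming, as is common in memorability research, that this means Spearman's ρ, the following minimal Python sketch shows how such a figure is computed. The function names and the five scores in the usage example are hypothetical, not LaMem data.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson correlation of the two rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Hypothetical memorability scores for five images (not LaMem data).
human = [0.91, 0.55, 0.72, 0.40, 0.83]
predicted = [0.88, 0.70, 0.65, 0.45, 0.79]
print(round(spearman_rho(human, predicted), 3))  # 0.9
```

A value of 1 would mean the model ranks the images exactly as people do; here two mid-ranked images are swapped, which gives 0.9. A human-consistency figure is typically obtained in the same way, by correlating the scores derived from two independent groups of participants.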

The real issue here is that although long-term human visual memory can store a remarkable amount of visual information, that information tends to degrade over time. Moreover, it has been found that memorability is a property of the image itself rather than of the human brain, and that it can be quantified using machine learning algorithms. In our everyday lives we are bombarded with a massive number of images that strain our memory, so the challenge is how to aid it. Can we do that by making images more memorable, thus allowing people to consume information more efficiently? It can also go the other way: the same algorithm can work in reverse and identify why some parts of an image tend to be forgotten rather than remembered.

The algorithm builds on existing computational architectures for visual processing, in particular convolutional neural networks (CNNs). So the science behind it already existed, but for the machine to have any kind of success another very important ingredient is needed: a large amount of data. In this case the dataset consisted of 60,000 images, taken from a variety of sources such as MIR Flickr, AVA, SUN, the image popularity dataset and more. Each image was scored according to its memorability. The dataset was not limited to faces of people: it was scene-centric and object-centric as well as anthropocentric, meaning that the algorithm could also work on images of landscapes and other inanimate objects.

Once you have a suitable dataset, the next step is to teach the machine, beginning with the very basic principle of how to detect, classify and relate the objects that an image is composed of. This happens by annotating the pictures, that is, adding metadata that guides the machine by telling it whether a given object class is present in or absent from an image. This can be achieved using Hybrid-CNN, a convolutional neural network for classifying categories of objects and scenes, which was further adapted to MemNet's needs. The adaptation was necessary because, unlike in visual classification, images that are memorable, or forgettable, need not look alike at all: an elephant, a kitchen, an abstract painting, a face and a billboard can all share the same level of memorability, yet no visual recognition algorithm would cluster these images together.

The research relied on crowdsourcing: workers on Amazon's Mechanical Turk (AMT) platform viewed a sequence of pictures and pressed a key whenever they came across one they had already seen (that is, if they remembered seeing it the first time). Their responses served as the measure of each image's memorability. The data was then annotated as described above, but with metadata and attributes such as aesthetics, popularity and emotions, and fed to MemNet, which achieved a rank correlation of 0.64, close to the human consistency rank correlation of 0.68, demonstrating that predicting human cognitive abilities is within reach for the field of computer vision. The correlation between these attributes and memorability led to some very interesting observations, such as:

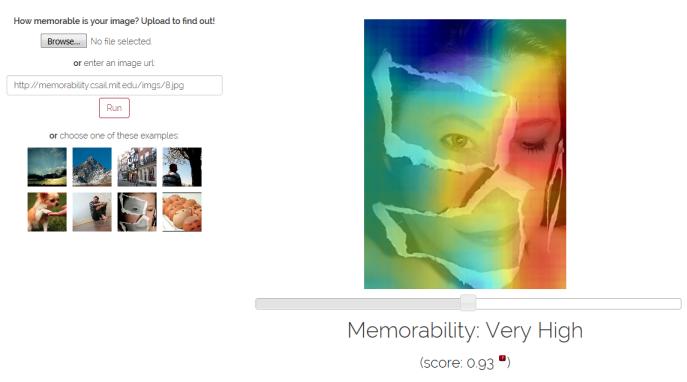

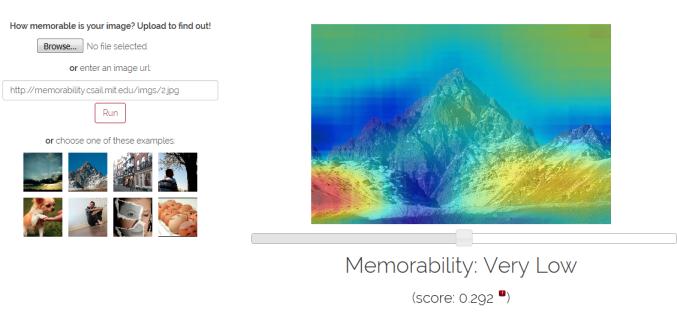
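The memory game described above can be turned into a per-image score in a straightforward way. The sketch below is a simplification, not the researchers' actual scoring procedure (which is more involved): it assumes each record is one repeat showing of an image to a worker, and takes the image's memorability to be its hit rate, the fraction of repeat showings on which the worker pressed the key. The record format, function name and image names are hypothetical.

```python
from collections import defaultdict


def memorability_scores(responses):
    """Compute a per-image hit rate from memory-game responses.

    responses: iterable of (image_id, pressed) pairs, one per *repeat*
               showing of an image; pressed is True if the worker pressed
               the key, i.e. recognized the image.
    Returns {image_id: hits / repeat_showings}.
    """
    hits = defaultdict(int)
    shown = defaultdict(int)
    for image_id, pressed in responses:
        shown[image_id] += 1
        if pressed:
            hits[image_id] += 1
    return {img: hits[img] / shown[img] for img in shown}


# Hypothetical responses collected from several workers.
responses = [
    ("kitchen.jpg", True), ("kitchen.jpg", True), ("kitchen.jpg", False),
    ("beach.jpg", False), ("beach.jpg", True),
    ("beach.jpg", False), ("beach.jpg", False),
]
scores = memorability_scores(responses)
print(scores["beach.jpg"])  # 0.25
```

Images with a higher hit rate are the ones people tend to remember; a model such as MemNet is then trained to predict scores like these from the image alone.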

The learned representations were then visualized as a memorability heat map that shows how much each object contributes to making an image memorable or forgettable. Hotter colors denote memorability and cooler colors denote forgettability. These maps could then be used in learning and education, for creating visual cues that reinforce the forgettable aspects of an image while keeping the memorable ones.
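A heat map of this kind can be produced for any model that outputs a memorability score. One generic technique, shown here as a stand-in and not necessarily the method the MemNet researchers used, is occlusion sensitivity: blank out one patch of the image at a time and record how much the predicted score drops. The sketch assumes a grayscale image given as a 2-D list and uses a hypothetical toy scoring function in place of a trained network.

```python
def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Return a heat map the same shape as `image`.

    image:    2-D list of floats (a grayscale picture).
    score_fn: any function mapping such an image to a memorability score;
              a hypothetical stand-in for a trained model.
    Each cell holds the drop in score when the patch covering it is
    replaced by `fill`. Larger drops mark regions that contribute more to
    the score ("hotter"); negative drops mark regions that lower it.
    """
    h, w = len(image), len(image[0])
    base = score_fn(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            rows = range(top, min(top + patch, h))
            cols = range(left, min(left + patch, w))
            occluded = [row[:] for row in image]  # copy, then blank one patch
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = base - score_fn(occluded)
            for r in rows:
                for c in cols:
                    heat[r][c] = drop
    return heat


def toy_score(img):
    """Hypothetical scorer: mean pixel brightness (NOT a real memorability model)."""
    return sum(map(sum, img)) / (len(img) * len(img[0]))


# A 4x4 image whose only bright content is a 2x2 block in the top-left corner.
image = [
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
heat = occlusion_map(image, toy_score)
for row in heat:
    print(row)
# [0.25, 0.25, 0.0, 0.0]
# [0.25, 0.25, 0.0, 0.0]
# [0.0, 0.0, 0.0, 0.0]
# [0.0, 0.0, 0.0, 0.0]
```

Only the bright corner lights up, because it is the only region whose removal changes the toy score. With a real model, rendering these values with a color map gives the hot-and-cold picture described above; smaller patches give finer maps at the cost of more model evaluations.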

If you want to try things out, visit the LaMem website, which contains samples of the algorithm's work and lets you upload pictures and see how they score on the memorability scale.


Last Updated: Sunday, 03 November 2019