| The Flaw In Every Neural Network Just Got A Little Worse |

| Written by MIke James | |||

| Wednesday, 21 October 2015 | |||

|

Neural networks are performing tasks that make it look as if strong AI, that is machines that think like us, is very possible and perhaps even not that far away. But there is a problem. Recently it was discovered that all neural networks have a surprising capacity for coming to the wrong conclusion. A neural network is a very complex network of learning elements. In the case of convolutional neural networks applied to vision, you show the network lots of photos complete with a classification and slowly they learn to correctly classify the training set. This much isn't so surprising because all you need to correctly classify any training set is a big memory. As long as the machine can detect which photo in the training set it is being presented with, it can look up its classification in a table. What is really interesting is what happens when you show the learning machine a photo it hasn't seen before. In the case of memory or rote learning it is obvious that the machine would simply shrug its shoulders and say - never seen it before. What is interesting and important about neural networks is that they have a capacity to correctly classify images that they have never seen before and weren't part of the training set. That is, neural networks learn to generalize and to many it seems that they generalize in ways that humans do. Often the most impressive thing about neural networks is how they get things wrong. When the network says that a photo of a cat is in fact a dog, you look at the photo and think "yes I can see how it might think that". Then things went wrong. Early in 2014 a paper "Intriguing properties of neural networks" by Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow and Rob Fergus, a team that includes authors from Google's deep learning research, showed that neural networks aren't quite like us - and perhaps we aren't quite like us, as well. What was found was that with a little optimization algorithm you could find examples of images that were very close to the original image that the network would misclassified. This was shocking. You could show the network a picture of a car and it would say - "car" - then you would show it a picture that to a human was identical and it would say it was something else. The existence of such adversarial images has caused a lot of controversy in the neural network community but many hoped that it could be mostly ignored - as long as adversarial images were isolated "freaks" of classification. You could even use them to improve the way a network performed by adding them to the training set. In many ways the existence of adversarial images was seen as evidence that we hadn't trained the network enough. Now we know that things aren't quite as simple. In a new paper "Exploring the space of adversarial images" Pedro Tabacof and Eduardo Valle at the University of Campinas have some very nice and easy to understand evidence that adversarial images might be more of a problem.

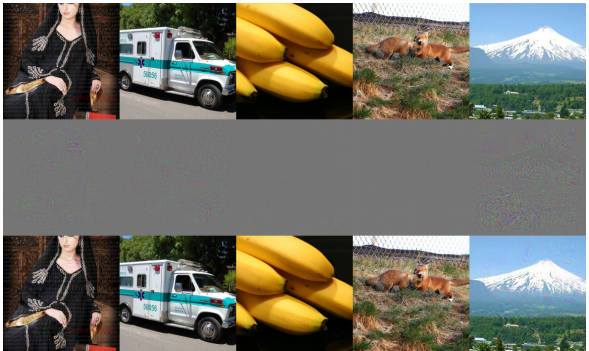

From left to right, correct labels: ‘Abaya’, ‘Ambulance’, ‘Banana’, ‘Kit Fox’, ‘Volcano’. Adversarial labels for all: ‘Bolete’ (a type of mushroom). Middle row is the difference between the two images. If you think of the input images as forming a multi-dimensional space then the different classifications divide the space into regions. It has long been assumed that these regions are complex. Adversarial images are images that are very close to the boundary of such a region. The question is how the adversarial images distributed: Are they isolated points in the pixel space, reachable only by a guided procedure with complete access to the model? Or do they inhabit large “adversarial pockets” in the space? Those questions have practical implications: if adversarial images are isolated or inhabit very thin pockets, they deserve much less worry than if they form large, compact regions. The methods used are surprisingly easy to understand. First find a adversarial image and discover how far away from the original image it is. Next generate random changes to the adversarial image so that is move a similar distance and discover if it is still adversarial. Using a simple Gaussian distribution allows a spherical region to be "probed": "we approach the problem indirectly, by probing the space around the images with small random perturbations. In regions where the manifold is nice — round, compact, occupying most of the space — the classifier will be consistent (even if wrong). In the regions where the manifold is problematic — sparse, discontinuous, occupying small fluctuating subspaces — the classifier will be erratic." They used the MNIST handwritten digits and the ImageNet databases and interesting there were some differences in the nature of the adversarial image spaces. The measure that is important is the percentage of images that keep their classification under the perturbation. It seems that adversarial images are not isolated, but they are close to the boundary of the original classification. A key finding is that the MNIST data is less vulnerable to adversarial images than the ImageNet data. For MNIST the adversarial image distortions were more obvious and they switch back to the correct classification more quickly than the ImageNet data. To explore the geometry more precisely the noise source was changed so that it had the same distribution as the distorted pixels in the adversarial set. This probed non spherical regions which are hoped to be closer to the shape of the adversarial zones. The problem is that the MNIST data the modified distributions found larger regions than the Gaussian but for the ImageNet data they found smaller regions. However in neither case does the effect seem large. Although this being presented as a flaw with neural networks it seems to be characteristic of a range of learning methods. Indeed a logistic regression classifier was used for the MNIST data and this could be the source of the differences found. "Curiously, a weak, shallow classifier (logistic regression), in a simple task (MNIST), seems less susceptible to adversarial images than a strong, deep classifier (OverFeat), in a complex task (ImageNet). The adversarial distortion for MNIST/logistic regression is more evident and humanly discernible. It is also more fragile, with more adversarial images reverting to the original at relatively lower noise levels. Is susceptibility to adversarial images an inevitable Achilles’ heel of powerful complex classifiers?" What all this means is that some how complex classifiers such as neural networks create classification regions which are more complex, more folded perhaps, and this allows pockets of adversarial images to exist. As commented previously it could also be yet another manifestation of the curse of dimensionality. As the number of dimensions increases the volume of a hypersphere becomes increasingly concentrated near its surface. In classification terms this means that as the dimension of the classification space increases any point within selected within a region is likely to be close to a boundary and hence perhaps a region of adversarial example. To quote the final sentence from the paper: "Speculative analogies with the illusions of the Human Visual System are tempting, but the most honest answer is that we still know too little. Our hope is that this article will keep the conversation about adversarial images ongoing and help further explore those intriguing properties. " So do humans have adversarial images or do we work in a way that somehow suppresses them?

More InformationExploring the Space of Adversarial Images Related ArticlesThe Deep Flaw In All Neural Networks The Flaw Lurking In Every Deep Neural Net Neural Networks Describe What They See Neural Turing Machines Learn Their Algorithms Google's Deep Learning AI Knows Where You Live And Can Crack CAPTCHA Google Uses AI to Find Where You Live Deep Learning Researchers To Work For Google Google Explains How AI Photo Search Works Google Has Another Machine Vision Breakthrough?

To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on, Twitter, Facebook, Google+ or Linkedin.

Comments

or email your comment to: comments@i-programmer.info

|

|||

| Last Updated ( Wednesday, 21 October 2015 ) |