Neural Networks Beat Humans

Written by Mike James

Wednesday, 25 February 2015

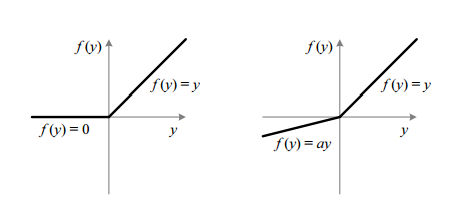

Another neural network breakthrough has been announced by Microsoft Research. Its neural network now outperforms humans on the 1000-class ImageNet dataset.

Neural networks have achieved amazing results in the past few years, but until now, when it comes to visual classification, humans were better. When set the task of classifying 100,000 test images, humans achieve a 5.1% error rate. Now the latest neural network has achieved 4.94%, which is a significant improvement on the previous best, GoogLeNet's 6.66%.

Microsoft Research's Beijing team, led by Jian Sun and Kaiming He, is the same team that applied the vision resolution pyramid to speed up the calculation of deep convolutional networks. This time their improved result is based on what looks like a minor tweak.

Many neural networks make use of rectifier neurons that don't output a signal until a threshold is reached. After the threshold the neuron simply reproduces the input, i.e. it's linear. The new idea is to add a parameter, a, that changes the behaviour so that the rectifier is "softer". Even in the cutoff portion of its characteristic it still passes a reduced signal. This is called a Parametric Rectified Linear Unit (PReLU) and it seems to make the network better for very little extra computational cost - one parameter per neuron.
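To make the difference between the two rectifiers concrete, here is a minimal sketch in plain Python. The function names and the slope value of 0.25 are chosen for illustration only and are not taken from the team's code. It assumes the threshold is at zero and that the parameter a is the slope applied below the threshold.

```python
def relu(x):
    """Standard rectifier: zero below the threshold, linear above it."""
    return max(0.0, x)


def prelu(x, a):
    """Parametric rectifier: linear above the threshold, slope a below it.

    With a == 0 this behaves like the standard rectifier; with a == 1 it
    is a purely linear unit.
    """
    return x if x > 0 else a * x


# Illustrative inputs and slope (assumed values, not from the article).
inputs = [-2.0, -0.5, 0.0, 1.5]
slope = 0.25

relu_out = [relu(x) for x in inputs]
prelu_out = [prelu(x, slope) for x in inputs]

print(relu_out)   # negative inputs are cut off completely
print(prelu_out)  # negative inputs pass through, scaled by the slope
```

In a real network a would not be fixed by hand. It would be one more value that training adjusts, alongside the weights.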

The reason that the PReLU is expected to be better is that it avoids having to use zero gradients in the backpropagation algorithm - something that is well known to slow down learning. Apart from the change in design nothing else has to change and the extra parameter can be added to the backpropagation's gradient descent. When they tried out the new architecture they found that there was a tendency for early layers to have larger values of a, which made the neurons more linear; and later stages to have smaller values of a, gradually becoming more non-linear. This can be interpreted as the model keeping more information in the early layers and making more classifications and distinctions in later layers.

A second, and more technical, improvement is to the initialization of the weights. In most cases neural networks are initialized to a random state, which in some cases can make training impossible because the learning gradients become very small. There are some schemes that assign "good" random starting weights but none that apply to rectified units. By an analysis of the PReLU neuron you can arrive at a recipe for a good random set of initial weights. In some experiments, it was observed that the new initialization allowed models that failed to converge with the original initialization methods not only to converge but also to show good results.

Putting all of this together allows for deeper neural networks that are faster to train. It is worth adding that training takes 3 or 4 weeks using GPUs.
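Both ideas can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the team's implementation: the function names are hypothetical, the threshold is taken to be zero, and the initialization rule used here, zero-mean Gaussian weights with standard deviation sqrt(2 / ((1 + a^2) * n)) for a layer with n inputs, is the recipe as I read it from the paper rather than something spelled out in this article.

```python
import math
import random


def prelu_grads(x, a):
    """Gradients of out = x if x > 0 else a * x.

    Returns (d_out/d_x, d_out/d_a). Below the threshold the gradient with
    respect to x is a rather than zero, so some error signal still flows.
    """
    if x > 0:
        return 1.0, 0.0
    return a, x


def init_std(fan_in, a=0.0):
    """Standard deviation for the initial weights of a layer.

    fan_in is the number of inputs to each neuron; a is the initial slope
    (a == 0 corresponds to a standard rectifier).
    """
    return math.sqrt(2.0 / ((1.0 + a * a) * fan_in))


def init_weights(fan_in, fan_out, a=0.0, seed=0):
    """Draw a fan_out x fan_in weight matrix from a zero-mean Gaussian."""
    rng = random.Random(seed)
    std = init_std(fan_in, a)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)]
            for _ in range(fan_out)]


# Illustrative layer sizes (assumed values).
weights = init_weights(fan_in=200, fan_out=10, a=0.25)
print(prelu_grads(-2.0, 0.25))
print(init_std(200))
```

The gradient with respect to a is simply the input whenever the neuron is below threshold, which is why the extra parameter fits into ordinary gradient descent without further changes.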

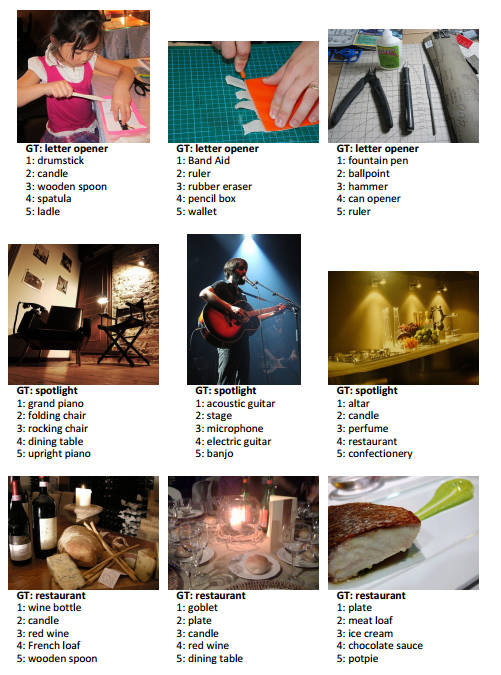

Things the network got wrong

Neural networks are now better than humans at classifying images. However, the nature of the advantage is interesting. Humans are good at general recognition - "it's a dog" or "it's a cat" are conclusions reached very quickly, but which breed of dog or cat may be beyond the human's ability. The neural network, on the other hand, takes almost as much work in learning the coarse-grained differences as the fine distinctions.

To quote from the end of the paper:

"While our algorithm produces a superior result on this particular dataset, this does not indicate that machine vision outperforms human vision on object recognition in general. On recognizing elementary object categories (i.e., common objects or concepts in daily lives) such as the Pascal VOC task [6], machines still have obvious errors in cases that are trivial for humans. Nevertheless, we believe that our results show the tremendous potential of machine algorithms to match human-level performance on visual recognition."

We still seem to be in an age of development where almost alchemical tinkering provides worthwhile gains. We seem to have discovered that neural networks actually work but we still aren't sure exactly what works best.

The network got this right - most humans just see a dog.

More Information

- Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification
- Microsoft Researchers' Algorithm Sets ImageNet Challenge Milestone

Related Articles

- The Deep Flaw In All Neural Networks
- The Flaw Lurking In Every Deep Neural Net
- Neural Networks Describe What They See
- Neural Turing Machines Learn Their Algorithms
- Google's Deep Learning AI Knows Where You Live And Can Crack CAPTCHA
- Google Uses AI to Find Where You Live
- Deep Learning Researchers To Work For Google
- Google Explains How AI Photo Search Works
- Google Has Another Machine Vision Breakthrough?


Last Updated ( Wednesday, 25 February 2015 )