| Competitive Self-Play Trains AI Well |

| Written by Mike James |

| Saturday, 14 October 2017 |

|

OpenAI has some results which are interesting even if you are not an AI expert. Set AI agents to play against each other and they spontaneously invent tackling, ducking, faking and diving - just watch the video.

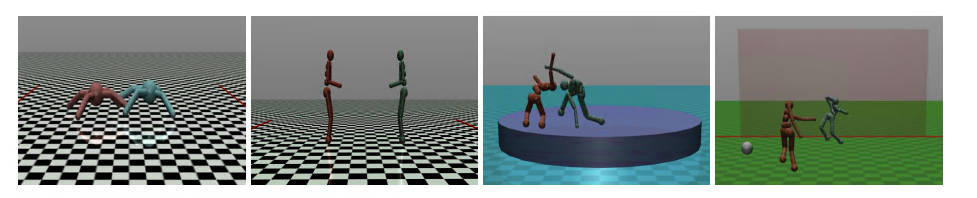

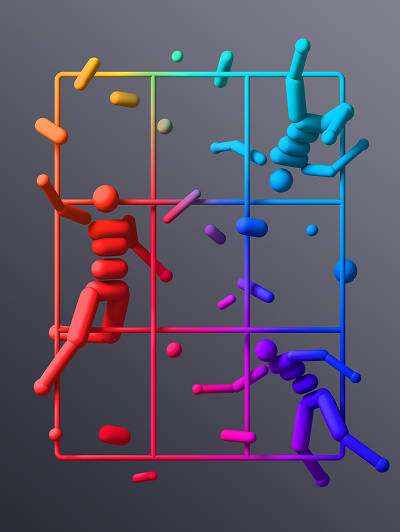

OpenAI seems to be developing its own approach to the subject It specializes in a form of reinforcement learning that features self-play. "We set up competitions between multiple simulated 3D robots on a range of basic games, trained each agent with simple goals (push the opponent out of the sumo ring, reach the other side of the ring while preventing the other agent from doing the same, kick the ball into the net or prevent the other agent from doing so, and so on), then analyzed the different strategies that emerged." To get the learning off to a start of some sort, initially the robots are rewarded for simple achievements like standing and moving forward, but slowly the reward is adjusted to be completely dependent on winning or losing. This is all that the robots get by way of training and no teacher shows them how to behave. What is surprising is that even with simple rewards the robots do develop what to us look like strategies.

Robots engage in four contact games - Run to Goal, You Shall Not Pass, Sumo and Kick and Defend:

It also seems that some of the skills learned are transferable to other situations. The sumo robots for example were found to be able to stand up while being buffeted by wind forces. By contrast robots simply trained to walk using classical reinforcement learning tended to fall over at once. If you think this all looks interesting or fun you can get all the code (in Python and using TensorFlow) on the project's GitHub page. The OpenAI blog also states: If you’d like to work on self-play systems, we’re hiring!

The project isn't stopping at this point: "We have presented several new competitive multi-agent 3D physically simulated environments. We demonstrate the development of highly complex skills in simple environments with simple rewards. In future work, it would be interesting to conduct larger scale experiments in more complex environments that encourage agents to both compete and cooperate with each other." Personally, I think that this sort of research is reaching the point where it would nice to see some physical realizations in the near future.

More InformationEmergent Complexity via Multi-Agent Competition Open AI Multiagent competition on GitHub Related ArticlesGeoffrey Hinton Says AI Needs To Start Over OpenAI Bot Triumphant Playing Dota 2 DeepLoco Learns Not Only To Walk But To Kick A Ball AI Goes Open Source To The Tune Of $1 Billion Open AI And Microsoft Exciting Times For AI OpenAI Universe - New Way of Training AIs

To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on Twitter, Facebook or Linkedin.

Comments

or email your comment to: comments@i-programmer.info

|

| Last Updated ( Thursday, 19 October 2017 ) |