IBM Watson and Project Intu for Embodied Cognition

Written by Nikos Vaggalis

Monday, 12 December 2016


Watson raises the bar in the quest for autonomous general AI with yet another advancement, one that this time looks set to have an emphatic impact on the industry as a whole. The new buzzwords that Watson introduces are embodied cognition and behaviors. Behaviors act as self-contained components but work together to transform the transaction between the human operator and the machine (be it a device, a robot, or anything else capable of carrying an intelligent software agent) into a conversation or deeper interaction.

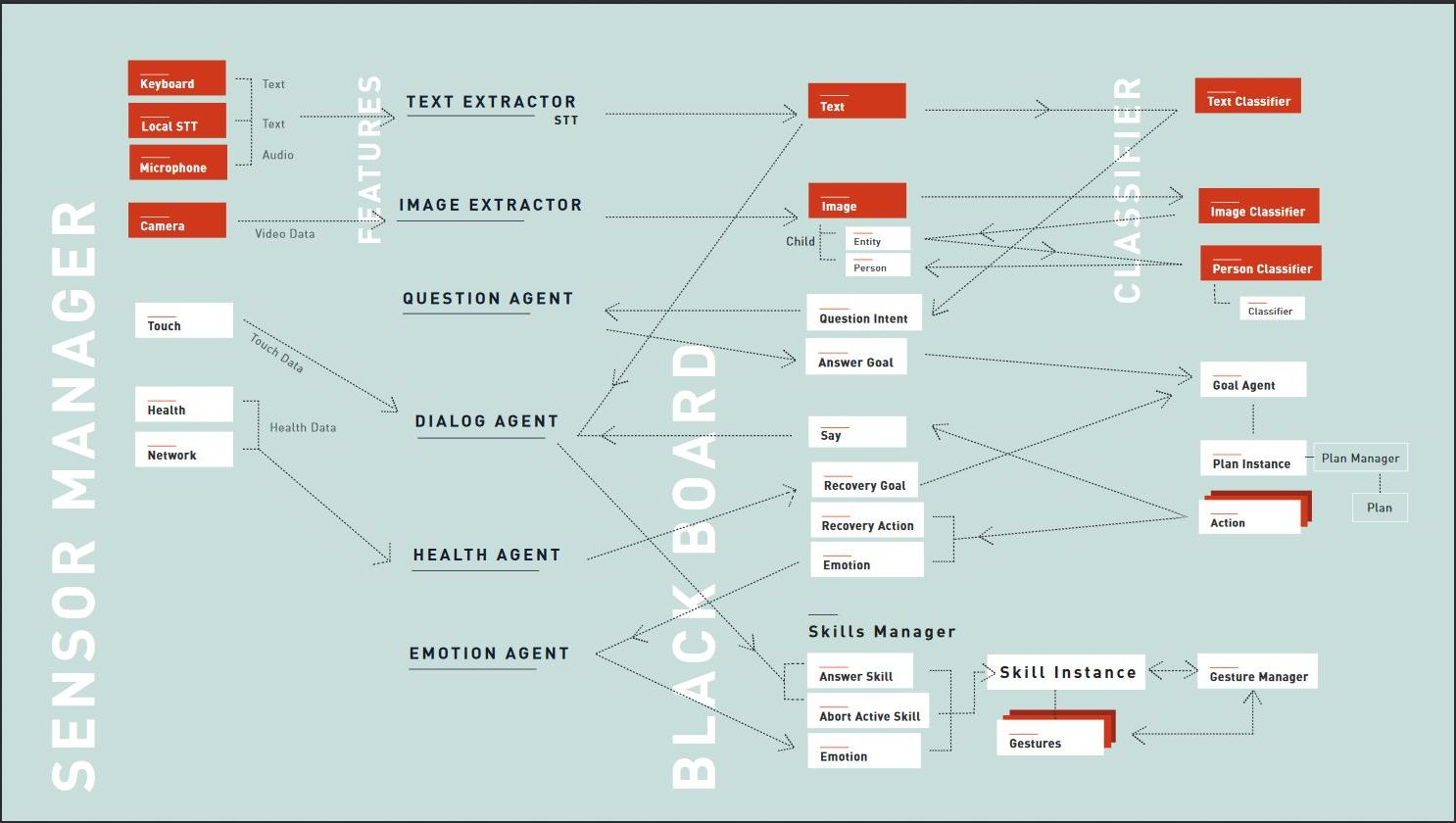

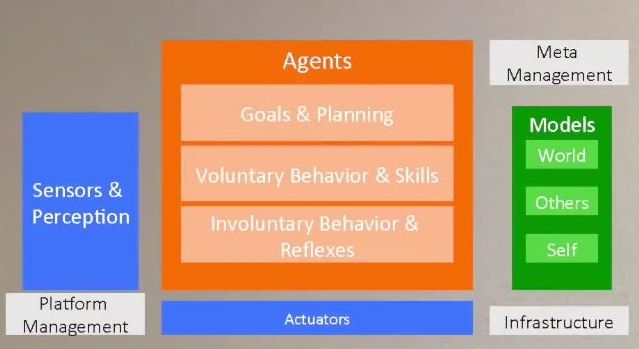

Until now, interaction with an AI-powered machine was mostly unidirectional, with the human asking a question, for example "Find me the closest Italian restaurant from my current location", or prompting the AI for action with a command like "Turn the windshield wipers on".

What about the AI responding to the same questions or prompts for action with:

"There is an Italian restaurant three blocks ahead, but you look tired, so I would suggest going for one that is even closer, but not Italian. Would you like me to re-locate or stick to the Italian one after all?"

"I'll turn the windshield wipers on, but from the tone of your voice I gather that you're angry, so I'll take the liberty of slowing the car down in order to stay clear of a potential accident."

This sets new standards for the future of human-AI interaction, with the machine now able to derive meaning from clues about human emotion, state or behavior, such as the tone of the voice or a facial expression.

In the examples outlined, a number of behaviors come into play: the 'emotion behavior', responsible for capturing emotion through tone or facial expression analysis, as it did when it detected that you were tired; the 'question behavior', responsible for understanding that you've just asked a question; or even the 'gesture behavior', which would make a robotic home assistant point in the direction of the restaurant. Other behaviors could involve 'weather' and 'time' monitoring, so the machine could offer advice such as "It's going to rain, better get an umbrella" or "Hurry up, the restaurant will close in half an hour".

These behaviors, or agents, are compartmentalized and encapsulated inside a system called Self, the backbone architecture of Project Intu. Self coordinates the cognitive mind (cognitive services) with the body (embodiment), enabling Watson to reason and act depending on the context of the situation at hand.

Each invocation of a behavior results in a call to the corresponding Watson service residing on the Bluemix cloud platform. The tone analysis service, the visual recognition service and the conversation service thus work together so that the embodied AI responds as a human would.
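To make the idea of compartmentalized behaviors backed by separate services more concrete, here is a minimal Python sketch. It is not Intu's actual API: the names `Observation`, `Behavior`, `Coordinator`, `build_coordinator` and the two stub services are all hypothetical, and the "services" are local functions standing in for remote cloud calls. It only illustrates the pattern of one coordinator dispatching an observation to several independent behaviors and collecting their replies.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Observation:
    """What the embodiment sensed: the spoken text plus a voice-tone label."""
    text: str
    tone: str = "neutral"  # assumption: stands in for a tone analysis result


# Assumption: a "service" is a local function standing in for a remote call.
Service = Callable[[Observation], Optional[str]]


def stub_tone_service(obs: Observation) -> Optional[str]:
    """Hypothetical stub for an emotion/tone service."""
    if obs.tone == "angry":
        return "You sound angry, so I will slow the car down."
    return None


def stub_conversation_service(obs: Observation) -> Optional[str]:
    """Hypothetical stub that only recognises that a question was asked."""
    if obs.text.strip().endswith("?"):
        return "That was a question; let me look it up."
    return None


@dataclass
class Behavior:
    """A self-contained component that delegates to exactly one service."""
    name: str
    service: Service

    def run(self, obs: Observation) -> Optional[str]:
        return self.service(obs)


@dataclass
class Coordinator:
    """Hypothetical stand-in for the coordinating role the article gives Self."""
    behaviors: List[Behavior] = field(default_factory=list)

    def register(self, behavior: Behavior) -> None:
        self.behaviors.append(behavior)

    def respond(self, obs: Observation) -> List[str]:
        # Ask every behavior in registration order; keep non-empty replies.
        replies = []
        for behavior in self.behaviors:
            reply = behavior.run(obs)
            if reply is not None:
                replies.append(f"[{behavior.name}] {reply}")
        return replies


def build_coordinator() -> Coordinator:
    coordinator = Coordinator()
    coordinator.register(Behavior("emotion", stub_tone_service))
    coordinator.register(Behavior("question", stub_conversation_service))
    return coordinator


if __name__ == "__main__":
    coordinator = build_coordinator()
    obs = Observation("Turn the windshield wipers on", tone="angry")
    for line in coordinator.respond(obs):
        print(line)
```

Because each behavior only knows about its own service, adding a 'weather' or 'gesture' behavior in this sketch means registering one more `Behavior` without touching the others, which is the kind of compartmentalization the article describes.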

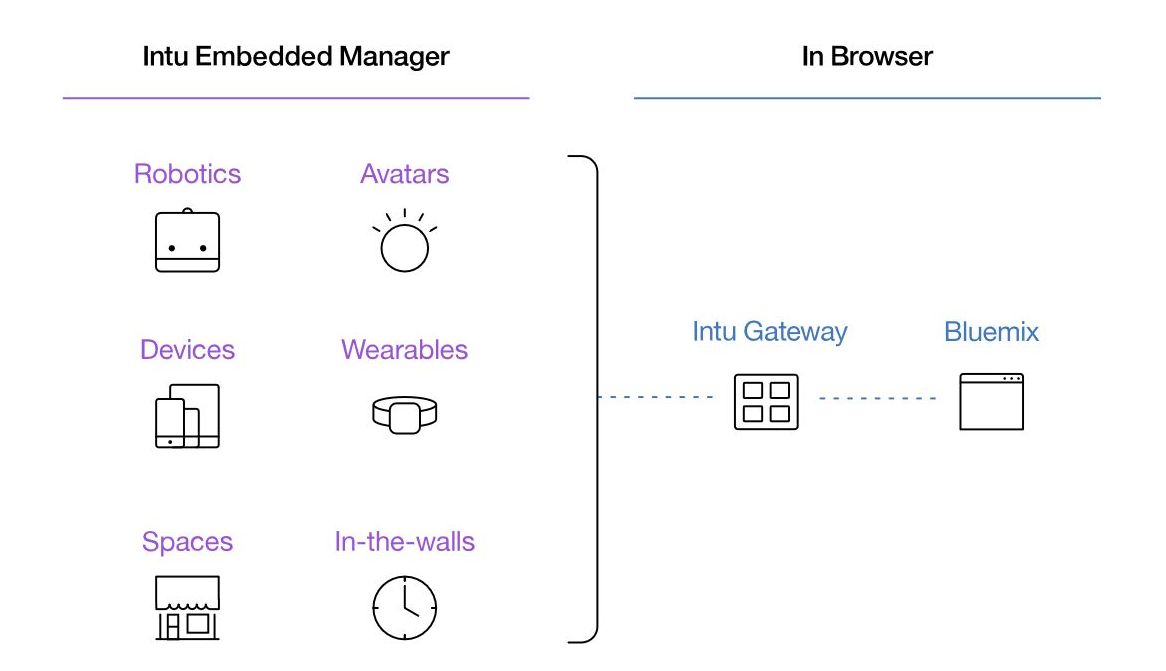

Since there is an enormous variety of devices, machines and robots to cater for, Intu has been made capable of running across multiple platforms, from Windows and OS X to Raspberry Pi, although it is still in experimental form. It is available for download from its GitHub repository. Apart from installing it by following the instructions, you'll also need a Bluemix cloud account and to request access to the Watson Intu Gateway. The following video outlines the process.


Last Updated (Monday, 12 December 2016)